Regular Issue, Vol. 10 N. 2 (2021), 123-136

eISSN: 2255-2863

DOI: https://doi.org/10.14201/ADCAIJ2021102123136


ADCAIJ: Advances in Distributed Computing and Artificial Intelligence Journal


Machine Learning-Based Hand Gesture Recognition via EMG Data

Zehra KARAPINAR SENTURK (a) and Melahat Sevgul BAKAY (b)

a Duzce University, Engineering Faculty, Department of Computer Engineering, Duzce, Turkey

b Duzce University, Engineering Faculty, Department of Biomedical Engineering, Duzce, Turkey

zehrakarapinar@duzce.edu.tr, melehatbakay@duzce.edu.tr

ABSTRACT

Gestures are one of the most important means of human-computer interaction: they mediate between human intention and the control of machines. Electromyography (EMG) pattern recognition has been studied for gesture recognition for years in order to control prostheses and rehabilitation systems. EMG data give information about the electrical activity of muscles; recorded from the forearm, they help to recognize hand gestures. In this work, hand gesture data from the UCI2019 EMG dataset, recorded with the Myo Thalmic armband, were classified with six different machine learning algorithms. Artificial Neural Network (ANN), Support Vector Machine (SVM), k-Nearest Neighbor (k-NN), Naïve Bayes (NB), Decision Tree (DT) and Random Forest (RF) methods were compared using several performance metrics: accuracy, precision, sensitivity, specificity, classification error, kappa, root mean squared error (RMSE) and correlation. The data belong to seven hand gestures. 700 samples from 7 classes (100 samples per class) were used in the experiments. The data were split 0.8-0.2, i.e. 80% of the samples were used for training and 20% for testing the classifiers. NB was found to be the best method: it shares the highest accuracy with SVM and RF and has the lowest RMSE. Its classification accuracy for individual gestures ranges from 97.52% to 100%. Considering the performance metrics, this study recognizes and classifies seven hand gestures successfully in comparison with the literature. The proposed method can be used for human-machine interaction and for controlling smart devices such as prostheses, wheelchairs and smart entertainment applications.

KEYWORDS

EMG, myo armband, hand gesture recognition, machine learning

1. Introduction

Physiological signals provide a variety of information about the human body. Correct acquisition of physiological signals makes the diagnosis, monitoring and treatment of diseases possible (Faust and Bairy, 2012). These signals are obtained with invasive or non-invasive sensors, which can be placed on the skin or implanted under it. The acquired signals are transferred to smart devices such as mobile phones or computers. The sensor location is chosen according to the type of application; in other words, the characteristics of the acquired physiological signals depend on where the sensors are placed (Van Drongelen, 2018).

Sensors or electrodes placed on a muscle record its electrical activity; this recording is called EMG. Muscle activity can be monitored for several applications, such as human-computer interaction, diagnosis of diseases and the biomechanics of human movement. EMG makes clinical diagnosis, the control of devices including prostheses, and electrical stimulation possible. Skin electrodes have become the most popular choice for EMG in recent years because they are cheap, non-invasive and easy to use. With skin electrodes, neuromuscular activity can be recognized even before the actual movement takes place (Vaiman, 2007).

EMG signals obtained from surface electrodes also help to classify hand gestures. Gestures, made with body parts such as the face and hands, support and facilitate communication with others. Gestures are used in diverse areas such as in-vehicle interaction (Molchanov et al., 2015), virtual reality (Sagayam and Hemanth, 2017), control of prostheses (Guo et al., 2017), augmented reality (Liang et al., 2015), teleoperation (Mi et al., 2016) and sign language (Zhang et al., 2011).

The cooperative activity of muscles generates diverse hand gestures, which machine learning algorithms can learn and predict after training. EMG data collected with multichannel wearable devices (e.g., the Myo Armband) have advantages in biomedical applications, because multichannel recordings significantly improve the recognition of multiple hand gestures compared with single-channel EMG devices (Englehart et al., 2001).

Bian et al. (2017) used surface EMG (sEMG) signals to determine motions for artificial limbs, aiming to meet the need for rehabilitation robots in hospitals. A rehabilitation robot can detect the intention of patients and assist them with basic activities of daily living. The researchers showed that SVM outperforms RF, NB and linear discriminant analysis (LDA), reaching 80% accuracy in user-independent experiments. They used five subjects, who performed eight movements with 50 repetitions. Donovan et al. (2017) proposed a C-language-based gesture recognition system using the Myo armband and obtained similar classification results (about 92% accuracy) for SVM and LDA.

Other methods have also been used for hand gesture recognition, such as convolutional neural networks (CNN) (Wei et al., 2019; Faust et al., 2018), ANN (Eshitha and Jose, 2018) and recurrent neural networks (RNN), where a feed-forward neural network (FFNN), a long short-term memory network (LSTM) and a gated recurrent unit (GRU) network were compared (Simão et al., 2019a). Xie et al. (2018) proposed a hybrid deep learning model with both convolutional and recurrent layers to improve classification performance. Simão et al. (2019b) suggested a novel method for gesture classification that uses an ANN trained in the generative adversarial network (GAN) framework. Normalized EMG data were classified into three hand gestures with machine learning algorithms including k-NN, LDA, NB, RF and SVM (Wahid et al., 2018). Kim et al. (2018) developed a deep learning model for arm gesture recognition that uses a wristband to recognize nine defined motions for smart TV applications. Jiang et al. (2020) classified hand gestures for American Sign Language digits 0 to 9 and showed that adding force myography (FMG) to an EMG-based recognition system improves the performance of muscle activity recognition.

Machine learning (ML) algorithms are frequently used to classify hand gestures. The aim of this study is to classify seven hand gestures recorded with the Myo Thalmic armband. We used a recent, publicly available dataset that contains signal data from 36 subjects and hundreds of repetitions of each hand gesture. Six ML algorithms were used and compared for the task: ANN, SVM, k-NN, NB, RF and DT. Their performance was evaluated with metrics including accuracy, sensitivity, precision and root mean squared error, and compared with other classification methods in the literature.

The rest of the paper is organized as follows: Section 2 gives the details of the dataset and the methods, Section 3 analyzes the experimental results, Section 4 discusses them, and Section 5 concludes the paper with an outlook on future work.

2. Materials and Methods

2.1. EMG Data Set

The EMG data for the gesture recognition application were obtained from the UCI Machine Learning Repository. The dataset was recorded from the forearm with the Myo Thalmic armband, and the data were transferred to a PC via Bluetooth. The Myo armband is manufactured by Thalmic Labs. Figure 1 shows the Myo armband and its placement. The armband has 8 equidistant sensors, and these 8 EMG channels were used as the inputs of the classifiers.

Figure 1: Myo armband and its placement on the forearm (Wahid et al., 2018)

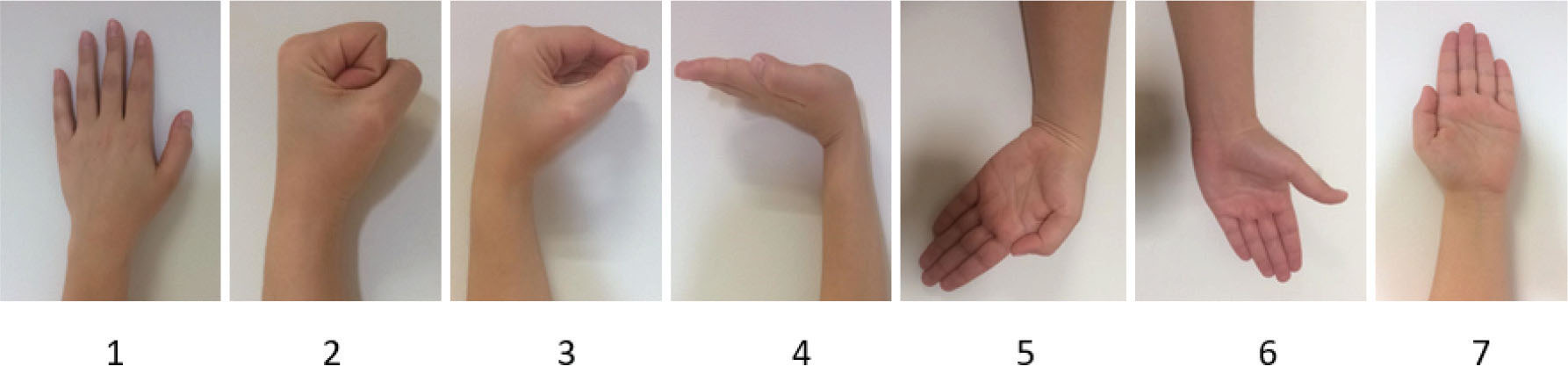

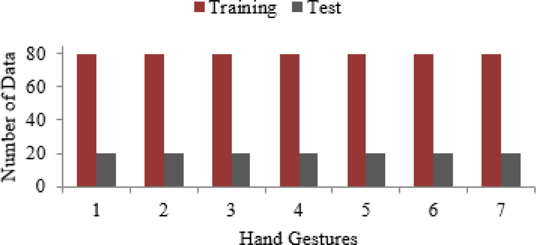

Data were collected from 36 subjects performing seven basic gestures. The gestures are numbered from 1 to 7: hand at rest, hand clenched in a fist, wrist flexion, wrist extension, radial deviation, ulnar deviation and extended palm, respectively, as shown in Figure 2. These gestures constitute the output set, i.e. the class labels, of the classifiers. To keep the computation manageable, 100 samples were randomly selected from each class, giving 700 EMG samples for the experiments. 80% of these samples were used for training and the remaining 20% for testing. Figure 3 shows the number of training and test samples for each of the seven hand gesture classes.
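The sampling and splitting described above can be sketched in Python as follows. This is a minimal sketch, not the authors' RapidMiner workflow: the function name `stratified_subsample_split`, the fixed random seed and the label list in the usage lines are hypothetical. It splits each class separately, which is consistent with the 20 test samples per gesture visible in the column totals of Table 2, although the paper does not state how the split was drawn.

```python
import random
from collections import defaultdict

def stratified_subsample_split(labels, per_class=100, train_frac=0.8, seed=0):
    """Randomly pick `per_class` sample indices from every class, then split
    each class's picks into training and test indices (hypothetical helper)."""
    rng = random.Random(seed)                       # fixed seed: assumed, for reproducibility
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    n_train = int(round(train_frac * per_class))    # 80 of 100 per class
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        picked = rng.sample(by_class[label], per_class)   # sampling without replacement
        train_idx.extend(picked[:n_train])
        test_idx.extend(picked[n_train:])
    return train_idx, test_idx

# Hypothetical label list: gestures 1..7 with 500 recordings each.
labels = [g for g in range(1, 8) for _ in range(500)]
train_idx, test_idx = stratified_subsample_split(labels)
print(len(train_idx), len(test_idx))  # 560 140
```

Splitting per class guarantees that every gesture appears in the test set in the same proportion, so the per-gesture results are based on equally sized groups.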

Figure 2: Representation of 7 hand gestures

Figure 3: Number of training and test data according to the hand gesture class

2.2. Data Normalization

Data normalization is an important preprocessing step in classification, in which data are scaled or transformed so that each feature contributes equally (Singh and Singh, 2019). Besides equalizing the contributions of the features, normalization reduces the computational load of machine learning algorithms because they operate on smaller numbers. Jayalakshmi and Santhakumaran (2011) and Acharya et al. (2011) showed that data normalization improves the performance of medical data classification. Based on these findings, we applied data normalization in this study: the EMG data were scaled to the range [-1, 1] with min-max normalization. A value x of a feature is transformed to the range between the lower limit a and the upper limit b according to Equation 1, where min(x) and max(x) are the minimum and maximum of that feature:

$$x' = a + \frac{\left(x - \min(x)\right)(b - a)}{\max(x) - \min(x)} \tag{1}$$

With a = -1 and b = 1, the minimum of each feature is mapped to -1 and its maximum to 1.
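A minimal sketch of min-max normalization to [-1, 1] in plain Python follows. The helper names are hypothetical, and fitting the minimum and maximum on the training rows only (then reusing them for test rows) is an assumption of this sketch; the paper does not say which samples the limits were computed from. The usage rows are made-up values.

```python
def fit_min_max(rows):
    """Per-feature minimum and maximum, computed from the training rows."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]

def min_max_scale(rows, mins, maxs, a=-1.0, b=1.0):
    """Map each feature linearly so that its min -> a and its max -> b."""
    scaled = []
    for row in rows:
        out = []
        for x, lo, hi in zip(row, mins, maxs):
            span = hi - lo
            if span == 0:
                out.append((a + b) / 2)   # constant feature: map to the midpoint (assumption)
            else:
                out.append(a + (x - lo) * (b - a) / span)
        scaled.append(out)
    return scaled

# Hypothetical 2-channel readings; the second channel is constant.
train = [[-12, 5], [0, 5], [12, 5]]
mins, maxs = fit_min_max(train)
print(min_max_scale(train, mins, maxs))      # [[-1.0, 0.0], [0.0, 0.0], [1.0, 0.0]]
print(min_max_scale([[18, 5]], mins, maxs))  # [[1.5, 0.0]]
```

Reusing the training limits keeps test information out of the preprocessing; as the second call shows, a test value outside the training range then maps slightly outside [-1, 1].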

2.3. Machine Learning-Based Classification

ML is a branch of artificial intelligence (AI) that builds a mathematical model from sample data, called training data. Such a system first learns from the data and then improves with experience. Based on the training process and the data, ML algorithms can perform classification, regression, prediction or decision making. Six ML algorithms were evaluated in this paper for the classification of hand gestures from EMG data: ANN, SVM, k-NN, NB, RF and DT. All methods were applied with RapidMiner Studio version 9.5.

An ANN is a computational model that mimics the working mechanisms of biological neural networks in order to reproduce basic functions of the brain, such as deriving new information through learning, remembering and generalizing (Faust et al., 2018). It can be used in many areas such as classification, prediction, optimization, regression and pattern recognition. An ANN has three main components: the architecture (input, hidden and output layers), the training algorithm and the activation function. In this study, the dataset has 8 attributes, so the designed network has 8 input neurons. Since the model classifies seven hand gestures, it has seven output neurons. One hidden layer with 20 neurons is used. The other parameters are a learning rate of 0.06, a momentum of 0.9, 600 training cycles, an error epsilon of 1E-4 and the sigmoid activation function.
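The network configuration above (8 inputs, one hidden layer of 20 sigmoid neurons, 7 outputs, learning rate 0.06, momentum 0.9, 600 training cycles, error epsilon 1E-4) can be sketched as a small backpropagation network. This is a from-scratch illustration, not RapidMiner's neural network operator: the class name `TinyMLP`, the squared-error loss, per-sample updates, the weight initialization range, the reading of error epsilon as an early-stopping threshold on the epoch error and the toy patterns are all assumptions.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

class TinyMLP:
    """8-20-7 network with sigmoid units, trained by backpropagation on
    squared error with per-sample SGD and momentum (hypothetical class)."""

    def __init__(self, n_in=8, n_hidden=20, n_out=7, lr=0.06, momentum=0.9, seed=0):
        rng = random.Random(seed)
        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
        self.w1 = init(n_hidden, n_in + 1)      # last column holds the bias weight
        self.w2 = init(n_out, n_hidden + 1)
        self.v1 = [[0.0] * (n_in + 1) for _ in range(n_hidden)]    # momentum buffers
        self.v2 = [[0.0] * (n_hidden + 1) for _ in range(n_out)]
        self.lr, self.momentum = lr, momentum

    def forward(self, x):
        xb = list(x) + [1.0]
        hb = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1] + [1.0]
        y = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w2]
        return xb, hb, y

    def train_step(self, x, target):
        xb, hb, y = self.forward(x)
        # Output deltas: derivative of 0.5 * (y - t)^2 through the sigmoid.
        d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, target)]
        # Hidden deltas, back-propagated through w2 (the bias unit is skipped).
        d_hid = [hb[j] * (1.0 - hb[j]) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                 for j in range(len(hb) - 1)]
        for w, v, deltas, inputs in ((self.w2, self.v2, d_out, hb), (self.w1, self.v1, d_hid, xb)):
            for i, d in enumerate(deltas):
                for j, inp in enumerate(inputs):
                    v[i][j] = self.momentum * v[i][j] - self.lr * d * inp
                    w[i][j] += v[i][j]
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))

    def predict(self, x):
        y = self.forward(x)[2]
        return max(range(len(y)), key=y.__getitem__) + 1   # gestures are numbered 1..7

# Hypothetical, well-separated toy patterns: one per gesture.
patterns = [[1.0 if i == k else -1.0 for i in range(8)] for k in range(7)]
targets = [[1.0 if j == k else 0.0 for j in range(7)] for k in range(7)]
net = TinyMLP()
losses = []
for cycle in range(600):                  # 600 training cycles
    epoch_loss = sum(net.train_step(x, t) for x, t in zip(patterns, targets))
    losses.append(epoch_loss)
    if epoch_loss < 1e-4:                 # error epsilon, read as a stopping threshold
        break
print(losses[0], losses[-1])
```

The momentum term reuses a fraction (0.9) of the previous weight change, which smooths the updates produced by the small learning rate.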

SVM is a supervised learning algorithm, based on statistical learning theory, that minimizes structural risk. SVM is used especially in face, text and handwriting recognition, medical prediction, time series analysis, classification and regression. SVM can be used with several kernels, such as linear, polynomial, radial, exponential, sigmoid and hybrid functions. For hand gesture classification, the radial basis function (RBF) was used as the kernel, and after many repeated experiments the [C, γ] pair was set to [0, 2] and the maximum number of iterations to 100000.
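To make the role of γ concrete, the sketch below evaluates the RBF kernel in its common form K(x, z) = exp(-γ‖x - z‖²) with γ = 2. It assumes this parameterization (the paper does not give RapidMiner's exact kernel definition), uses hypothetical helper names and points, and only computes kernel values; it does not train an SVM.

```python
import math

def rbf_kernel(x, z, gamma=2.0):
    """K(x, z) = exp(-gamma * ||x - z||^2); gamma = 2 as in the text."""
    sq_dist = sum((xi - zi) ** 2 for xi, zi in zip(x, z))
    return math.exp(-gamma * sq_dist)

def gram_matrix(rows, gamma=2.0):
    """Pairwise kernel values, the matrix an SVM solver works with."""
    return [[rbf_kernel(a, b, gamma) for b in rows] for a in rows]

points = [[0.0, 0.0], [0.5, 0.0], [1.0, 1.0]]   # hypothetical normalized samples
for row in gram_matrix(points):
    print([round(k, 4) for k in row])
```

A larger γ makes the kernel value decay faster with distance, so each support vector influences a smaller neighborhood of the normalized feature space.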

The k-NN algorithm is a classification and regression algorithm in which neighbors contribute according to their distance: each neighbor has a weight of 1/d, where d is its distance to the sample being classified. The most important parameter of k-NN is k, the number of neighbors. In this paper, k was set to 5 and the Euclidean distance was used.
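A minimal sketch of distance-weighted k-NN with k = 5, Euclidean distance and 1/d weights follows. The function name, the handling of a zero distance (returning the exactly matching sample's label) and the toy points are assumptions of this sketch; `math.dist` requires Python 3.8 or later.

```python
import math
from collections import defaultdict

def knn_predict(train_x, train_y, query, k=5):
    """Distance-weighted k-NN: each of the k nearest neighbours votes with weight 1/d."""
    neighbours = sorted((math.dist(x, query), y) for x, y in zip(train_x, train_y))[:k]
    votes = defaultdict(float)
    for d, label in neighbours:
        if d == 0.0:
            return label          # exact match: 1/d is undefined, so take its label (assumption)
        votes[label] += 1.0 / d
    return max(votes, key=votes.get)

# Hypothetical normalized 2-channel samples for gestures 1 and 2.
train_x = [[-0.9, -0.8], [-0.7, -0.9], [-0.8, -0.6], [0.7, 0.8], [0.9, 0.6], [0.8, 0.9]]
train_y = [1, 1, 1, 2, 2, 2]
print(knn_predict(train_x, train_y, [-0.75, -0.75]))  # 1
print(knn_predict(train_x, train_y, [0.6, 0.7]))      # 2
```

With 1/d weights, the two distant gesture-2 samples that enter the five nearest neighbours of the first query contribute far less than the three close gesture-1 samples.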

The NB classifier is based on a probabilistic approach. It can be viewed as a Bayesian network in which the attributes are conditionally independent given the class, and each attribute depends directly on the class to be learned. NB is used in areas such as discretization, data mining, identification and classification. For this classification, the parameters of NB were as follows: 12 kernels, a minimum bandwidth of 0.01 and the greedy estimation mode.
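A kernel-density Naive Bayes classifier can be sketched as below. It is a swapped-in, simplified variant: each class-conditional feature density is a Gaussian kernel density estimate whose bandwidth comes from Silverman's rule of thumb, floored at the minimum bandwidth of 0.01 given above; RapidMiner's greedy estimation with 12 kernels is not reproduced. The class and function names and the toy data are hypothetical.

```python
import math
from collections import defaultdict

def kde_density(x, samples, bandwidth):
    """Gaussian kernel density estimate at x from one class's values of one feature."""
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))
    return norm * sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)

class KernelNaiveBayes:
    """Naive Bayes with per-class, per-feature Gaussian KDEs (hypothetical class)."""

    def __init__(self, min_bandwidth=0.01):
        self.min_bandwidth = min_bandwidth

    def fit(self, X, y):
        by_class = defaultdict(list)
        for row, label in zip(X, y):
            by_class[label].append(row)
        self.priors = {c: len(rows) / len(y) for c, rows in by_class.items()}
        self.columns = {c: list(zip(*rows)) for c, rows in by_class.items()}
        self.bandwidths = {}
        for c, cols in self.columns.items():
            bws = []
            for col in cols:
                m = len(col)
                mean = sum(col) / m
                std = math.sqrt(sum((v - mean) ** 2 for v in col) / m)
                # Silverman's rule of thumb, floored at the minimum bandwidth.
                bws.append(max(1.06 * std * m ** -0.2, self.min_bandwidth))
            self.bandwidths[c] = bws
        return self

    def predict(self, row):
        def log_posterior(c):
            # Conditional independence: add one log-density per feature.
            lp = math.log(self.priors[c])
            for x, col, bw in zip(row, self.columns[c], self.bandwidths[c]):
                lp += math.log(kde_density(x, col, bw) + 1e-300)   # guard against log(0)
            return lp
        return max(self.priors, key=log_posterior)

X = [[-0.9, -0.8], [-0.7, -0.9], [-0.8, -0.6], [0.7, 0.8], [0.9, 0.6], [0.8, 0.9]]
y = [1, 1, 1, 2, 2, 2]
nb = KernelNaiveBayes().fit(X, y)
print(nb.predict([-0.75, -0.75]), nb.predict([0.6, 0.7]))  # 1 2
```

Working with summed log-densities instead of a product of densities avoids numerical underflow when many features are combined.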

A DT repeatedly divides the data into groups (recursive partitioning) so that the elements of each group increasingly share the same class label. DT algorithms are applied for classification and regression in data mining, economics and medicine. Information gain was chosen as the split criterion. The maximal depth, minimal leaf size, minimal gain and minimal size for split were set to 10, 4, 0.1 and 4, respectively.
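The information gain criterion can be illustrated by scoring candidate thresholds on one numeric attribute. This sketch only shows the split criterion (entropy in bits, binary splits at midpoints between consecutive distinct values); the depth, leaf-size and minimal-gain limits listed above would be checked around it when a full tree is grown. Function names and data are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(values, labels, threshold):
    """Entropy reduction from splitting one numeric attribute at `threshold`."""
    left = [lab for v, lab in zip(values, labels) if v <= threshold]
    right = [lab for v, lab in zip(values, labels) if v > threshold]
    n = len(labels)
    remainder = sum(len(part) / n * entropy(part) for part in (left, right) if part)
    return entropy(labels) - remainder

def best_split(values, labels):
    """Try midpoints between consecutive distinct values; return (gain, threshold)."""
    distinct = sorted(set(values))
    candidates = [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]
    return max(((information_gain(values, labels, t), t) for t in candidates),
               default=(0.0, None))

# Hypothetical normalized values of one EMG channel for gestures 1 and 2.
values = [-0.9, -0.7, -0.2, 0.3, 0.6, 0.8]
labels = [1, 1, 1, 2, 2, 2]
gain, threshold = best_split(values, labels)
print(gain, threshold)   # gain 1.0 at a threshold midway between -0.2 and 0.3
```

A split that separates the two gestures completely removes all of the initial one bit of entropy, so it easily exceeds the minimal gain of 0.1.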

RF is an ensemble learning method that aims to improve classification performance by building multiple DTs during training. The individually built trees are combined into a decision forest, and their predictions are aggregated into the final decision. RF is used in astronomy, biomedicine, finance, health and physics, and can solve both classification and regression problems. For this application, the number of trees was set to 100, the criterion to information gain and the maximal depth to 10.
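The bagging-and-voting idea behind RF can be sketched with deliberately simplified trees. Assumptions: each of the 100 trees is a depth-1 stump (not a depth-10 tree) chosen by information gain on a bootstrap sample and a random subset of about √d features, and the forest uses a plain majority vote, which may differ from the voting strategy configured in RapidMiner. All names and the toy data are hypothetical.

```python
import math
import random
from collections import Counter

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_stump(X, y, features):
    """Depth-1 tree: the (feature, threshold) with the highest information gain."""
    base, best = entropy(y), (-1.0, None, None)
    for f in features:
        vals = sorted(set(row[f] for row in X))
        for a, b in zip(vals, vals[1:]):
            t = (a + b) / 2
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            gain = base - (len(left) * entropy(left) + len(right) * entropy(right)) / len(y)
            if gain > best[0]:
                best = (gain, f, t)
    _, f, t = best
    if f is None:                          # no possible split: constant prediction
        label = majority(y)
        return lambda row: label
    left_label = majority([lab for row, lab in zip(X, y) if row[f] <= t])
    right_label = majority([lab for row, lab in zip(X, y) if row[f] > t])
    return lambda row: left_label if row[f] <= t else right_label

def fit_forest(X, y, n_trees=100, seed=0):
    rng = random.Random(seed)
    n_features = len(X[0])
    k = max(1, round(math.sqrt(n_features)))                  # features tried per tree
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        features = rng.sample(range(n_features), k)
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], features))
    return forest

def predict_forest(forest, row):
    return majority([tree(row) for tree in forest])           # plain majority vote

X = [[-0.9, -0.8], [-0.7, -0.9], [-0.8, -0.6], [0.7, 0.8], [0.9, 0.6], [0.8, 0.9]]
y = [1, 1, 1, 2, 2, 2]
forest = fit_forest(X, y)
print(predict_forest(forest, [-0.75, -0.75]), predict_forest(forest, [0.6, 0.7]))
```

Because every tree sees a different bootstrap sample and feature subset, individual trees can be wrong (a bootstrap may even contain a single class), while the vote over 100 trees remains stable.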

Several parameter settings were tried to obtain more accurate results; the values given above are the best combinations found in these trials for the classification of hand gestures.

2.4. Performance Metrics

Eight performance metrics, shown in Table 1, were used to compare the success of the ML algorithms in the present investigation: accuracy, precision, sensitivity, specificity, classification error, kappa, root mean squared error and correlation. Accuracy is the ratio of the number of correct predictions to the total number of predictions, as shown in Equation 2:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{2}$$

Table 1: Evaluation of metrics with respect to ML methods

| Metric | ANN | SVM | k-NN | NB | DT | RF |
|---|---|---|---|---|---|---|
| Accuracy (%) | 91.43 | 96.43 | 92.14 | 96.43 | 92.86 | 96.43 |
| Precision (%) | 92.63 | 97.62 | 92.35 | 96.49 | 93.35 | 96.43 |
| Sensitivity (%) | 91.43 | 96.43 | 92.14 | 96.43 | 92.86 | 96.43 |
| Specificity (%) | 98.57 | 99.41 | 98.74 | 99.29 | 98.81 | 99.41 |
| Classification Error (%) | 8.57 | 3.57 | 7.86 | 3.57 | 7.14 | 3.57 |
| Kappa | 0.900 | 0.958 | 0.908 | 0.958 | 0.917 | 0.958 |
| Root Mean Squared Error | 0.302 | 0.198 | 0.265 | 0.189 | 0.269 | 0.220 |
| Correlation | 0.934 | 0.956 | 0.920 | 0.957 | 0.921 | 0.988 |

In Equation 2, TP, FP, TN and FN are the numbers of true positives, false positives, true negatives and false negatives, respectively. Precision is the fraction of the samples predicted as a class that truly belong to it, and sensitivity is the fraction of the samples of a class that are correctly recognized; they are calculated with Equation 3 and Equation 4, respectively. Specificity, also called the true negative rate, is given by Equation 5.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{3}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{4}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{5}$$

Classification error is the percentage of incorrect class assignments. Equation 6 calculates it from the accuracy:

$$\text{Classification error}\,(\%) = 100 - \text{Accuracy}\,(\%) \tag{6}$$

The kappa value is a statistic that measures the agreement between two raters (here, the true and the predicted class labels) while correcting for agreement that occurs by chance. A kappa value close to 1 is desirable: the closer it is to 1, the more accurate the classification. Equation 7 shows the calculation of kappa, where Pr(a) is the observed relative agreement between the two raters and Pr(e) is the probability of agreement occurring by chance:

$$\kappa = \frac{\Pr(a) - \Pr(e)}{1 - \Pr(e)} \tag{7}$$
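As a worked check of the kappa formula, the sketch computes it from a confusion matrix, estimating Pr(e) from the row and column totals (the usual chance-agreement estimate). Applied to the NB confusion matrix of Table 2, transcribed below with rows as predicted and columns as true gestures, it gives 0.958, the NB kappa in Table 1. The function name is hypothetical.

```python
def cohen_kappa(cm):
    """Kappa from a square confusion matrix (rows: predicted, columns: true)."""
    n = sum(sum(row) for row in cm)
    pr_a = sum(cm[i][i] for i in range(len(cm))) / n                   # observed agreement
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(col) for col in zip(*cm)]
    pr_e = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2  # chance agreement
    return (pr_a - pr_e) / (1 - pr_e)

# NB confusion matrix transcribed from Table 2.
NB_CM = [
    [20, 0, 0, 0, 0, 0, 0],
    [0, 20, 0, 0, 0, 0, 1],
    [0, 0, 19, 1, 0, 1, 0],
    [0, 0, 0, 19, 0, 1, 0],
    [0, 0, 0, 0, 20, 0, 0],
    [0, 0, 1, 0, 0, 18, 0],
    [0, 0, 0, 0, 0, 0, 19],
]
print(round(cohen_kappa(NB_CM), 3))   # 0.958
```

Here Pr(a) = 135/140 and, because every true class has 20 test samples, Pr(e) = 1/7, which is why kappa is noticeably lower than the raw accuracy.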

Root mean squared error (RMSE) measures the differences between the values predicted by an algorithm and the observed (real) values. In Equation 8, n is the number of observations, and y_i and x_i are the predicted and measured values, respectively. When the prediction errors have zero mean, RMSE equals their standard deviation.

$$\text{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - x_i\right)^2} \tag{8}$$

Correlation measures the degree of the linear relationship between two variables and is expressed by Equation 9, where cov denotes covariance, corr correlation, X and Y are random variables, µX and µY are the expected values of X and Y, σX and σY are the standard deviations of X and Y, and E is the expected value operator:

$$\operatorname{corr}(X, Y) = \frac{\operatorname{cov}(X, Y)}{\sigma_X \sigma_Y} = \frac{E\left[(X - \mu_X)(Y - \mu_Y)\right]}{\sigma_X \sigma_Y} \tag{9}$$
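The RMSE and correlation formulas can be written directly as below, using population (1/n) moments in the correlation. These hypothetical helpers work on plain numeric vectors; the paper does not state how RapidMiner converts class predictions into numbers for RMSE and correlation, so they illustrate the formulas rather than reproduce Table 1.

```python
import math

def rmse(predicted, measured):
    """Square root of the mean squared difference y_i - x_i."""
    n = len(predicted)
    return math.sqrt(sum((y - x) ** 2 for y, x in zip(predicted, measured)) / n)

def correlation(xs, ys):
    """cov(X, Y) / (sigma_X * sigma_Y), with population (1/n) moments."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs) / n)
    sy = math.sqrt(sum((y - my) ** 2 for y in ys) / n)
    return cov / (sx * sy)

print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))         # sqrt(4/3), about 1.1547
print(correlation([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))  # 1.0 up to rounding: perfectly linear
```

Using 1/n in both the covariance and the standard deviations makes the factor cancel, so the same correlation is obtained with 1/(n - 1) throughout.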

3. Experimental Results

ML algorithms are widely used for classification in many areas. In this study, seven hand gestures were classified with six ML algorithms, using the EMG hand gesture dataset from the UCI Machine Learning Repository. Accuracy, precision, sensitivity, specificity, classification error, kappa, RMSE and correlation were evaluated and compared to find the best method for classifying the seven hand gestures.

In this study, we conducted the experiments with the following steps:

1. Select a classification model for the problem from Model = {ANN, SVM, k-NN, NB, DT, RF}.

2. Find the optimal parameters for the corresponding model.

3. Execute the model.

4. Record performance metrics for the model.

5. Repeat steps 1-4 until all models are evaluated.

6. Examine the best model in depth.

7. Obtain 2×2 confusion matrices for the best classifier to see its performance for each gesture class.
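Steps 1 to 6 amount to a loop over the candidate models. The sketch assumes a hypothetical interface in which each model is a trainer function that already contains its tuned parameters (step 2) and returns a predictor; it records only accuracy, and the two toy trainers stand in for the six real classifiers.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Row = Sequence[float]
Predictor = Callable[[Row], int]
Trainer = Callable[[List[Row], List[int]], Predictor]

def run_experiments(models: Dict[str, Trainer], train_x: List[Row], train_y: List[int],
                    test_x: List[Row], test_y: List[int]) -> Tuple[Dict[str, float], str]:
    """Steps 1-6: train every model, score it on the test set, pick the best."""
    results: Dict[str, float] = {}
    for name, train in models.items():                 # steps 1 and 5: visit every model
        predict = train(train_x, train_y)              # steps 2-3: parameters live in the trainer
        correct = sum(predict(x) == t for x, t in zip(test_x, test_y))
        results[name] = 100.0 * correct / len(test_y)  # step 4: record accuracy (%)
    best = max(results, key=results.get)               # step 6: model to examine in depth
    return results, best

# Two hypothetical stand-in trainers.
def majority_trainer(xs, ys):
    label = max(sorted(set(ys)), key=ys.count)
    return lambda x: label

def nearest_centroid_trainer(xs, ys):
    centroids = {}
    for c in set(ys):
        pts = [x for x, y in zip(xs, ys) if y == c]
        centroids[c] = [sum(col) / len(pts) for col in zip(*pts)]
    return lambda x: min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))

train_x, train_y = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]], [1, 1, 2, 2]
test_x, test_y = [[0.0, 0.5], [5.0, 5.5]], [1, 2]
results, best = run_experiments(
    {"majority": majority_trainer, "centroid": nearest_centroid_trainer},
    train_x, train_y, test_x, test_y)
print(results, best)   # {'majority': 50.0, 'centroid': 100.0} centroid
```

Keeping the parameters inside each trainer separates tuning (step 2) from the comparison loop, so every model is scored on exactly the same test set.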

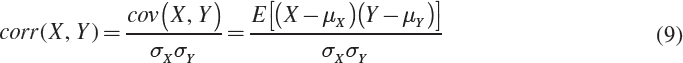

The flowchart of the proposed method is shown in Figure 4. Table 1 gives the performance of the six ML algorithms (the classification models listed above) in classifying the seven hand gestures. The accuracies are overall accuracies, computed from the 7×7 confusion matrix of each classifier. Some hand gestures were detected without error, while others were occasionally misclassified. In terms of accuracy (96.43%), sensitivity (96.43%), classification error (3.57%) and kappa (0.958), SVM, NB and RF tie for the best results. SVM achieves the highest precision (97.62%), and SVM and RF share the highest specificity (99.41%). NB yields the lowest RMSE (0.189). Finally, RF provides the best correlation, 0.988.

Figure 4: Flowchart of the proposed gesture detection method

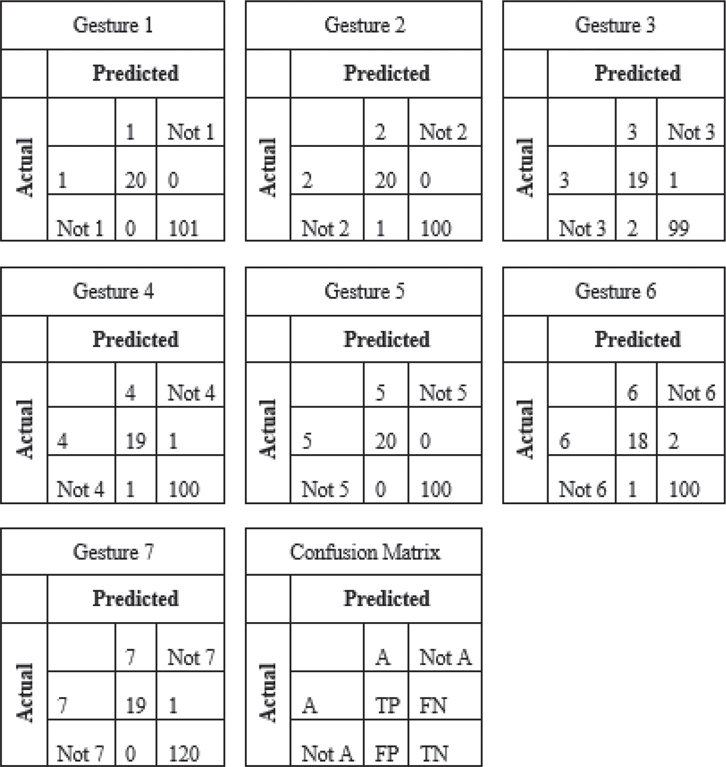

To see the performance of the classifier for individual hand gestures, we obtained 2×2 confusion matrices of the best classifier. To determine the best method, we considered the RMSE, which measures the error between the true and the predicted values, in addition to the other metrics: the closer the RMSE is to zero, the more accurate the classification. NB gives the lowest RMSE, 0.189. Since NB has the lowest RMSE and ties with SVM and RF for the highest accuracy, sensitivity and kappa, we selected NB as the best classifier. From its 7×7 confusion matrix, given in Table 2, we constructed a 2×2 matrix for each gesture class. These small confusion matrices show the performance of the classifier for each hand gesture class; the purpose was to identify the gestures for which the classifier makes errors.

Table 2: Confusion matrix of NB

| | True 1 | True 2 | True 3 | True 4 | True 5 | True 6 | True 7 |
|---|---|---|---|---|---|---|---|
| Pred. 1 | 20 | 0 | 0 | 0 | 0 | 0 | 0 |
| Pred. 2 | 0 | 20 | 0 | 0 | 0 | 0 | 1 |
| Pred. 3 | 0 | 0 | 19 | 1 | 0 | 1 | 0 |
| Pred. 4 | 0 | 0 | 0 | 19 | 0 | 1 | 0 |
| Pred. 5 | 0 | 0 | 0 | 0 | 20 | 0 | 0 |
| Pred. 6 | 0 | 0 | 1 | 0 | 0 | 18 | 0 |
| Pred. 7 | 0 | 0 | 0 | 0 | 0 | 0 | 19 |

The diagonal values in Table 2 are the true positives of the classification. The table shows at a glance that the classification is successful: only a few samples were misclassified, at most two per gesture class (5 of the 140 test samples in total).

From the 2×2 confusion matrices given in Figure 5, we calculated the accuracy, precision, sensitivity and specificity of each gesture with Equations 2 to 5; the results are given in Table 3.
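The one-vs-rest reduction behind the per-gesture matrices can be sketched as follows: for each gesture k, the 7×7 matrix of Table 2 collapses into TP, FP, FN and TN, and the accuracy, precision, sensitivity and specificity formulas are applied. The matrix is transcribed from Table 2 (rows: predicted, columns: true); the function names are hypothetical. Averaging the per-gesture precision and sensitivity over the seven gestures gives 96.49% and 96.43%, the NB values in Table 1.

```python
# NB confusion matrix from Table 2: rows are predicted gestures 1..7, columns true gestures 1..7.
NB_CM = [
    [20, 0, 0, 0, 0, 0, 0],
    [0, 20, 0, 0, 0, 0, 1],
    [0, 0, 19, 1, 0, 1, 0],
    [0, 0, 0, 19, 0, 1, 0],
    [0, 0, 0, 0, 20, 0, 0],
    [0, 0, 1, 0, 0, 18, 0],
    [0, 0, 0, 0, 0, 0, 19],
]

def one_vs_rest(cm, k):
    """Collapse the multi-class matrix into (TP, FP, FN, TN) for class index k."""
    n = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fp = sum(cm[k]) - tp                   # predicted k, truly another gesture
    fn = sum(row[k] for row in cm) - tp    # truly k, predicted as another gesture
    return tp, fp, fn, n - tp - fp - fn

def class_metrics(cm, k):
    tp, fp, fn, tn = one_vs_rest(cm, k)
    return {
        "accuracy": 100.0 * (tp + tn) / (tp + tn + fp + fn),
        "precision": 100.0 * tp / (tp + fp),
        "sensitivity": 100.0 * tp / (tp + fn),
        "specificity": 100.0 * tn / (tn + fp),
    }

per_gesture = [class_metrics(NB_CM, k) for k in range(len(NB_CM))]
for k, m in enumerate(per_gesture, start=1):
    print(k, {name: round(v, 2) for name, v in m.items()})
macro_precision = sum(m["precision"] for m in per_gesture) / len(per_gesture)
macro_sensitivity = sum(m["sensitivity"] for m in per_gesture) / len(per_gesture)
print(round(macro_precision, 2), round(macro_sensitivity, 2))   # 96.49 96.43
```

Each 2×2 matrix is built from the full test set of 140 samples, so TN dominates and per-gesture accuracy and specificity stay high even when a gesture has misclassified samples.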

Figure 5: 2×2 Confusion matrices with respect to each gesture class

Table 3: Metrics for NB classifier based on each gesture

| Gesture No | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Accuracy (%) | 100 | 99.17 | 97.52 | 98.34 | 100 | 97.52 | 99.28 |
| Precision (%) | 100 | 95.24 | 90.48 | 95.00 | 100 | 94.74 | 100 |
| Sensitivity (%) | 100 | 100 | 95.00 | 95.00 | 100 | 90.00 | 95.00 |
| Specificity (%) | 100 | 99.01 | 98.02 | 99.01 | 100 | 99.01 | 100 |

3.1. Comparison with the state-of-the-art

The performance of the proposed ML-based hand gesture recognition system was also compared with existing studies in the literature. The year of publication, the dataset, the number of recognized gestures, the method and the classification accuracy were considered in the comparison. Table 4 summarizes the state of the art. LDA in the table stands for linear discriminant analysis, and 3+3 C-RNN for a network with three convolutional and three recurrent layers.

Table 4: Summary of state-of-the-art that predicts hand gestures with EMG data

| Reference and Year | Dataset | Number of hand gestures | ML Algorithm | Accuracy (%) |
|---|---|---|---|---|
| (Kim et al., 2018) – 2018 | Private-Wearable Sensor | 9 | GRU | 96.19 |
| (Xie et al., 2018) – 2018 | Public-Ninapro (N) | 17 | 3+3 C-RNN | 83.61 |
| (Wahid et al., 2018) – 2018 | Private-Myo Armband | 3 | LDA | 94.54 |
| (Simão et al., 2019a) – 2019 | Public-UC2018 DualMyo (D) | 8 | FFNN | 90.82 |
| | NinaPro DB5 (NP) | 8 | GRU | 95.75 |
| (Simão et al., 2019b) – 2019 | Public-UC2018 DualMyo | 8 | ANN trained in GAN | 95.5 |
| (Jiang et al., 2020) – 2020 | Private-Myo Armband | 10 | LDA | 81.50 |
| This study | Public-UCI2019 Myo | 7 | NB | 96.43 |

Our classification achieves the highest accuracy, 96.43%, among the EMG-based gesture classification studies in Table 4. For individual gestures, the accuracy of our method is even higher, ranging from 97.52% to 100% (Table 3).

Table 4 summarizes recent studies on gesture recognition. All of them address hand gesture recognition except Kim et al. (2018), who recognized arm movements, and Jiang et al. (2020), who recognized American Sign Language digits; the rest recognize hand gestures such as hand at rest, fist, wrist flexion and wrist extension. The table shows that the proposed method provides higher recognition accuracy with a very simple implementation. The NB-based method discriminates hand gestures successfully without the time-consuming design and training of a neural network, and without compromising accuracy.

4. Discussion

The UCI Machine Learning Repository contains 4 different datasets related to EMG-based gesture recognition. We chose the most recent one, donated in 2019, because it was obtained from more donors and contains more instances than the other publicly available datasets. In addition, since this dataset is new, it has not yet been studied comprehensively: it has been analyzed only to examine the latent factors limiting the performance of sEMG interfaces (Kim et al., 2018), and, to our knowledge, no hand gesture classification has been performed on it yet. These reasons led us to work on this dataset. Even though it contains more samples than the others, a proper performance evaluation would require EMG signals from a variety of donors in different physical conditions, for example of different age and sex, and with or without diseases. Age, sex or muscular diseases may affect the recognition of hand gestures, and their effect should be investigated. We plan to construct a new dataset with more donors to achieve better classification in the future. In addition, unsupervised methods will be investigated for the problem as an alternative to supervised classification.

In this classification problem, we used 100 EMG samples per gesture class because of limited computational capacity. With more powerful machines, a larger number of EMG samples could be used, which may improve the generalization ability of the classifier. The detection of other hand or finger gestures with lightweight classification methods can also be studied.

5. Conclusion

In this study, different ML algorithms were applied to the hand gesture recognition problem to predict seven hand gestures: hand at rest, hand clenched in a fist, wrist flexion, wrist extension, radial deviation, ulnar deviation and extended palm. A publicly available dataset was used in the experiments so that other researchers can build on the results. 100 samples from each gesture class were used, 80% for training and 20% for testing. Six ML methods (ANN, SVM, k-NN, NB, DT and RF) were evaluated in terms of accuracy, precision, sensitivity, specificity, classification error (%), kappa, RMSE and correlation. Among them, NB was determined to be the best model for classifying hand gestures because of its high accuracy and low RMSE; it is also a lightweight method. The experiments show that machine learning approaches can identify hand gestures almost perfectly without a heavy computational load. This may facilitate many real-time applications, such as identifying the hand gestures of traffic police officers or of deaf people, and IoT applications like controlling lighting or other functions by hand gestures in smart homes and smart vehicles. These are among the most actively studied topics today, and the accurate detection of hand gestures achieved in this paper can contribute to them.

The biggest challenge in ML-based hand gesture recognition is that, although the EMG datasets contain many instances, they come from very few donors: many repetitions of certain movements are recorded from a small number of people. The success of a classifier can only be properly evaluated on a wide variety of EMG signals from previously unseen donors. In the future, we will extend the methodology of the present study with a larger dataset covering different age and sex groups, and we will also investigate the effect of muscle disorders on EMG-based gesture recognition.

Conflict of Interest Statement

On behalf of all authors, the corresponding author states that there is no conflict of interest.

6. References

Acharya, U. R., Dua, S., Du, X., Sree S, V., and Chua, C. K., (2011). Automated diagnosis of glaucoma using texture and higher order spectra features. IEEE Transactions on Information Technology in Biomedicine, 15(3):449–455. ISSN 10897771. doi:10.1109/TITB.2011.2119322.

Bian, F., Li, R., and Liang, P., (2017). SVM based simultaneous hand movements classification using sEMG signals. In 2017 IEEE International Conference on Mechatronics and Automation, ICMA 2017, pages 427–432. Institute of Electrical and Electronics Engineers Inc. ISBN 9781509067572. doi:10.1109/ICMA.2017.8015855.

Donovan, I., Valenzuela, K., Ortiz, A., Dusheyko, S., Jiang, H., Okada, K., and Zhang, X., (2017). MyoHMI: A low-cost and flexible platform for developing real-time human machine interface for myoelectric controlled applications. In 2016 IEEE International Conference on Systems, Man, and Cybernetics, SMC 2016 - Conference Proceedings, pages 4495–4500. Institute of Electrical and Electronics Engineers Inc. ISBN 9781509018970. doi:10.1109/SMC.2016.7844940.

Englehart, K., Hudgins, B., and Parker, P. A., (2001). A wavelet-based continuous classification scheme for multifunction myoelectric control. IEEE Transactions on Biomedical Engineering, 48(3):302–311. ISSN 00189294. doi:10.1109/10.914793.

Eshitha, K. V. and Jose, S., (2018). Hand Gesture Recognition Using Artificial Neural Network. In 2018 International Conference on Circuits and Systems in Digital Enterprise Technology, ICCSDET 2018. Institute of Electrical and Electronics Engineers Inc. ISBN 9781538605769. doi:10.1109/ICCSDET.2018.8821076.

Faust, O. and Bairy, M. G., (2012). Nonlinear analysis of physiological signals: A review. Journal of Mechanics in Medicine and Biology, 12(4). ISSN 02195194. doi:10.1142/S0219519412400155.

Faust, O., Hagiwara, Y., Hong, T. J., Lih, O. S., and Acharya, U. R., (2018). Deep learning for healthcare applications based on physiological signals: A review. Computer Methods and Programs in Biomedicine, 161:1–13. ISSN 18727565. doi:10.1016/j.cmpb.2018.04.005.

Guo, W., Sheng, X., Liu, H., and Zhu, X., (2017). Toward an Enhanced Human-Machine Interface for Upper-Limb Prosthesis Control with Combined EMG and NIRS Signals. IEEE Transactions on Human-Machine Systems, 47(4):564–575. ISSN 21682291. doi:10.1109/THMS.2016.2641389.

Jayalakshmi, T. and Santhakumaran, A., (2011). Statistical Normalization and Back Propagation for Classification. International Journal of Computer Theory and Engineering, 3(1):89–93. ISSN 17938201. doi:10.7763/ijcte.2011.v3.288.

Jiang, S., Gao, Q., Liu, H., and Shull, P. B., (2020). A novel, co-located EMG-FMG-sensing wearable armband for hand gesture recognition. Sensors and Actuators, A: Physical, 301. ISSN 09244247. doi:10.1016/j.sna.2019.111738.

Kim, J. H., Hong, G. S., Kim, B. G., and Dogra, D. P., (2018). deepGesture: Deep learning-based gesture recognition scheme using motion sensors. Displays, 55:38–45. ISSN 01419382. doi:10.1016/j.displa.2018.08.001.

Liang, H., Yuan, J., Thalmann, D., and Nadia, M. T., (2015). AR in hand: Egocentric palm pose tracking and gesture recognition for augmented reality applications. In MM 2015 - Proceedings of the 2015 ACM Multimedia Conference, pages 743–744. Association for Computing Machinery, Inc. ISBN 9781450334594. doi:10.1145/2733373.2807972.

Mi, J., Sun, Y., Wang, Y., Deng, Z., Li, L., Zhang, J., and Xie, G., (2016). Gesture recognition based teleoperation framework of robotic fish. In 2016 IEEE International Conference on Robotics and Biomimetics, ROBIO 2016, pages 137–142. Institute of Electrical and Electronics Engineers Inc. ISBN 9781509043644. doi:10.1109/ROBIO.2016.7866311.

Molchanov, P., Gupta, S., Kim, K., and Pulli, K., (2015). Multi-sensor system for driver’s hand-gesture recognition. In 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition, FG 2015. Institute of Electrical and Electronics Engineers Inc. ISBN 9781479960262. doi:10.1109/FG.2015.7163132.

Sagayam, K. M. and Hemanth, D. J., (2017). Hand posture and gesture recognition techniques for virtual reality applications: a survey. Virtual Reality, 21(2):91–107. ISSN 14349957. doi:10.1007/s10055-016-0301-0.

Simão, M., Neto, P., and Gibaru, O., (2019a). EMG-based online classification of gestures with recurrent neural networks. Pattern Recognition Letters, 128:45–51. ISSN 01678655. doi:10.1016/j.patrec.2019.07.021.

Simão, M., Neto, P., and Gibaru, O., (2019b). Improving novelty detection with generative adversarial networks on hand gesture data. Neurocomputing, 358:437–445. ISSN 18728286. doi:10.1016/j.neucom.2019.05.064.

Singh, D. and Singh, B., (2019). Investigating the impact of data normalization on classification performance. Applied Soft Computing Journal. ISSN 15684946. doi:10.1016/j.asoc.2019.105524.

Vaiman, M., (2007). Standardization of surface electromyography utilized to evaluate patients with dysphagia. Head and Face Medicine, 3(1). ISSN 1746160X. doi:10.1186/1746-160X-3-26.

Van Drongelen, W., (2018). Signal Processing for Neuroscientists. Academic Press, 2 edition. ISBN 9780128104835.

Wahid, M. F., Tafreshi, R., Al-Sowaidi, M., and Langari, R., (2018). Subject-independent hand gesture recognition using normalization and machine learning algorithms. Journal of Computational Science, 27:69–76. ISSN 18777503. doi:10.1016/j.jocs.2018.04.019.

Wei, W., Wong, Y., Du, Y., Hu, Y., Kankanhalli, M., and Geng, W., (2019). A multi-stream convolutional neural network for sEMG-based gesture recognition in muscle-computer interface. Pattern Recognition Letters, 119:131–138. ISSN 01678655. doi:10.1016/j.patrec.2017.12.005.

Xie, B., Li, B., and Harland, A., (2018). Movement and Gesture Recognition Using Deep Learning and Wearable-sensor Technology. In ACM International Conference Proceeding Series, pages 26–31. Association for Computing Machinery. ISBN 9781450365246. doi:10.1145/3268866.3268890.

Zhang, X., Chen, X., Li, Y., Lantz, V., Wang, K., and Yang, J., (2011). A Framework for Hand Gesture Recognition Based on Accelerometer and EMG Sensors. IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans, 41(6):1064–1076. doi:10.1109/TSMCA.2011.2116004.