ADCAIJ: Advances in Distributed Computing and Artificial Intelligence Journal

Regular Issue, Vol. 12 N. 1 (2023), e31704

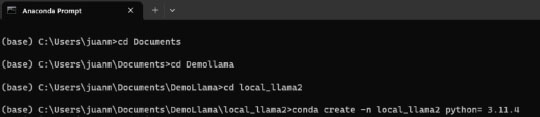

eISSN: 2255-2863

DOI: https://doi.org/10.14201/adcaij.31704

Generative Artificial Intelligence: Fundamentals

Juan M. Corchadoa, Sebastian López Fa, Juan M. Núñez Va, Raul Garcia S.a, and Pablo Chamosoa

a BISITE Research Group, University of Salamanca, Edificio Multiusos I+D+I, Salamanca, 37007

corchado@usal.es, sebastianlopezflorez@usal.es, jmnunez@usal.es, raulrufy@usal.es, chamoso@usal.es

ABSTRACT

Generative language models have witnessed substantial traction, notably with the introduction of refined models aimed at more coherent user-AI interactions—principally conversational models. The epitome of this public attention has arguably been the refinement of the GPT-3 model into ChatGPT and its subsequent integration with auxiliary capabilities such as search features in Microsoft Bing. Despite voluminous prior research devoted to its developmental trajectory, the model’s performance, and applicability to a myriad of quotidian tasks remained nebulous and task specific. In terms of technological implementation, the advent of models such as LLMv2 and ChatGPT-4 has elevated the discourse beyond mere textual coherence to nuanced contextual understanding and real-world task completion. Concurrently, emerging architectures that focus on interpreting latent spaces have offered more granular control over text generation, thereby amplifying the model’s applicability across various verticals. Within the purview of cyber defense, especially in the Swiss operational ecosystem, these models pose both unprecedented opportunities and challenges. Their capabilities in data analytics, intrusion detection, and even misinformation combatting is laudable; yet the ethical and security implications concerning data privacy, surveillance, and potential misuse warrant judicious scrutiny.

KEYWORDS

large language models; artificial intelligence; transformers; GPT

1. Introduction

Artificial intelligence has become one of the driving forces of 21st century economics, especially because of the development of generative artificial intelligence. This article explains what generative artificial intelligence is and what its foundations are, paying special attention to large language models. A short historical review analyses how AI has evolved to date and then presents the most relevant models and techniques. The limitations and challenges of the techniques that have been used thus far are analyzed and it is explained how generative artificial intelligence offers new and better alternatives for problem solving. The ethical and social aspects linked to the use of these technologies are also of great relevance and are explored throughout this paper. Finally, future trends are presented as well as the authors’ vision of the potential of this technology to change the world.

2. Definition and Fundamentals

This section describes generative artificial intelligence (AI), emphasizing its most significant and differentiating elements. This is followed by a brief review of the evolution of artificial intelligence and how this has led to the emergence of generative AI as we know it today. Finally, a summary of the progress of AI up to where we are today is presented.

2.1. What Is Generative AI?

Generative AI is defined as a branch of artificial intelligence capable of generating novel content, as opposed to simply analyzing or acting on existing data, as expert systems do (Vaswani et al., 2017). This is a real evolution over the intelligent systems used to date that are, for instance, based on neural networks, case-based reasoning systems, genetic algorithms, fuzzy logic (Nguyen et al., 2013) or hybrid AI models (Gala et al., 2016; Abraham et al., 2009; Corchado & Aiken, 2002; Corchado et al., 2021) and models and algorithms that used specific data for specific problems and generated a specific answer on the basis of the input data.

Generative artificial intelligence incorporates discriminative or transformative models trained on a corpus or dataset, capable of mapping input information into a high-dimensional latent space. In addition, it has a generative model that drives stochastic behavior, creating novel content at every attempt, even with the same input stimuli. These models can perform unsupervised, semi-supervised or supervised learning, depending on the specific methodology. Although this paper aims to present the full potential of generative AI, the focus is on large language models (LLMs) to generalize from there (Chang et al., 2023). LLMs are a subcategory of generative artificial intelligence (AI). Generative AI refers to models and techniques that have the ability to generate new and original content, and within this domain, LLMs specialize in generating text. An LLM such as OpenAI’s GPT (Generative Pre-trained Transformer) is basically trained to generate text, or rather to answer questions with paragraphs of text (Guan et al., 2020). Once trained, it can generate complete sentences and paragraphs that are coherent and, in many cases, indistinguishable from those written by humans, simply from an initial stimulus or prompt (Madotto et al., 2021).

While generative AI also encompasses models that can generate other types of content such as images (e.g., DALL-E, also from OpenAI) or music, LLMs focus specifically on the domain of language (Adams, et al., 2023). LLMs can therefore be considered as a part or subset of the broad category of generative AI.

LLMs are neural networks designed to process sequential data (Bubeck et al., 2023). An LLM can be trained on a corpus of text (digitized books, databases, information from the internet, etc.); the input text can be used to learn to generate text, word-by-word in a sequence, given previous information. Transformers are perhaps the most widely used models in the construction of these LLMs (Von Oswald et al., 2023). Large Scale Language Models (LLMs) do not exclusively use transformers, although transformers, in particular the architecture introduced in the paper “Attention Is All You Need” by Vaswani et al. in 2017, have proven to be especially effective for natural language processing tasks (Nadkarni et al., 2011) and have been the basis of many popular LLMs such as GPT and BERT. However, before the popularization of transformers, recurrent neural networks (RNNs) and their variants, such as LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Units) networks, were commonly used to model sequences in natural language processing tasks (Sherstinsky, 2020; Tang et al., 2020).

As research in the field of artificial intelligence and natural language processing continues to advance, it is possible that new architectures and approaches emerge which may be used in conjunction with or instead of transformers in future LLMs. Thus, although transformers are currently a dominant architecture for LLMs, they are not the only architecture used, but they are one of the most reliable when it comes to generating new text that is grammatically correct and semantically meaningful (Vaswani et al., 2017). This is due to three specific elements: (a) the first is the use of positional coding mechanisms, which allow the network to assign a position to a word within a sentence so that this position is part of the network’s input data. This means that the word order information becomes part of the data itself rather than part of the structure of the network, so that as the network is trained, with lots of textual data, it learns how to interpret positional coding and to order words coherently from the data used in the training; (b) secondly, attention (Bahdanav et al., 2014), which emerged as a mechanism for the meaningful translation of text from one language to another by developing algorithms to relate words to each other and thus know how to use them in an adequate context; (c) finally, self-attention or autoregressive attention, allows for better knowledge of language features, in addition to gender and order, such as synonyms, which are identified through the analysis of multiple examples.

The same is true for verb conjugations, adjectives, etc. Previous approaches that assign importance based on word frequencies can misrepresent the true semantic importance of a word; in contrast, self-attention allows models to capture long-term semantic relationships within an input text, even when that text is split and processed in parallel (Vaswani et al., 2017). Text generation is also about creating content and sequences of, for example, proteins, audio, computer code or chess moves (Eloundou et al., 2023).

Advances at the algorithm level in the development of transformers, for example, together with the current computational capacity and the ability to pre-train with unlabelled data and to refine training (fine tuning) have driven this great AI revolution. Model performance depends heavily on the scale of computation, which includes the amount of computational power used for training, the number of model parameters and the size of the dataset. Pre-training an LLM requires hundreds or thousands of GPUs and weeks to months of dedicated training time. For example, it is estimated that a single training run for a GPT-3 model with 175 billion parameters, trained on 300 billion tokens, can cost five million dollars in computational costs alone.

LLMs can be pre-trained on large amounts of unlabeled data. For example, GPT is trained on unlabeled text data, which allows it to learn patterns in human language without explicit guidance (Radford and Narasimhan, 2018). Since unlabeled data is much more prevalent than labeled data, this allows LLMs to learn about natural language in a much larger training corpus (Brown et al., 2020). The resulting model can be used in multiple applications because its training is not specific to a particular set of tasks.

General-purpose LLMs can be “fine-tuned” to generate output that matches the priors of any specific configuration (Ouyang et al., 2022; Liu et al., 2023), known as fine tuning. For example, an LLM may generate several potential answers to a given query, but some of them may be incorrect or biased. To fine-tune this model, human experts can rank the outputs to train a reward function that prioritizes some answers over others. Such refinements can significantly improve the quality of the model, making a general-purpose model fit to solve a particular problem (Ouyang et al., 2022).

2.2. History and Evolution from AI to Generative AI

Artificial intelligence is a field of computer science and technology concerned with the development of computer systems that can perform tasks that typically require human intelligence, such as learning, decision-making, problem solving, perception and natural language (Russell and Norvig, 2014). Turing addressed the central question of artificial intelligence: “Can machines think” (Turing, 1950). Soon after, it was John McCarthy who coined the term “artificial intelligence” in 1956 and contributed to the development of the Lisp programming language, which for many has been the gateway to AI (McCarthy et al., 2006). He, along with others such as Marvin Minsky (MIT), Lotfali A. Zadeh (University of Berkeley, California) or John Holland (University of Michigan), have been the pioneers (Zadeh, 2008). Trends, models, and algorithms have emerged from their work. Their work has led to the creation of schools of thought and systems have been built on its basis, bringing about real advances in fields such as medicine.

Thus, branches of artificial intelligence such as symbolic logic, expert systems, neural networks (Corchado et al., 2000), fuzzy logic, natural language processing, genetic algorithms, computer vision, multi-agent systems (González-Briones et al., 2018) or social machines (Hendler & Mulvehill, 2016; Chamoso et al., 2019) have emerged. All these branches are divided into sub-branches and these into others, such that, today, the level of specialization is high.

Most complex systems are affected by multiple elements; they generate, or are related to multiple data sources, they evolve over time, and in most cases, they contain a degree of expert knowledge (Pérez-Pons et al., 2023). In this regard, it seems clear that the combined use of symbolic systems capable of modelling knowledge together with connectionist techniques that analyze data at different levels or from different sources can offer global solutions. It is not difficult to find such problems, for example, in the field of medicine, where knowledge modelling is as important as the analysis of patient data alone. One example of model fusion was the Gene-CBR platform for genetic analysis. On the one hand, it used the methodological framework delivered with a case-based reasoning system together with several neural networks and fuzzy systems (Díaz et al., 2006; Hernandez-Nieves et al., 2021). This model was built to facilitate the analysis of myeloma.

The 1970s/80s was a breakthrough period for artificial intelligence and distributed computing (Janbi et al., 2022). A time of great change, with the Internet taking off, at a time when the world was approaching a new century and where the attention of the computing world was more focused on the potential of the Internet than on the advancement of AI. This fact, coupled with hardware limitations, industry disinterest in AI and a lack of disruptive ideas contributed to the beginning of a period of stagnation in the field, which is known as the “AI winter”.

But after a winter there is a summer and this came at the turn of the century, with the emergence of what we call deep learning and convolutional neural networks (CNNs). It was a major concept that brought about a radical change in the way we deal with information. These networks use machine learning techniques in a somewhat different way to how they were originally conceived (Bengio, 2009; Pérez-Pons et al., 2021; Hernández et al., 2021). Unlike other models, they have multiple hidden layers that allow features and patterns to be extracted from the input data in an increasingly complex and abstract manner (Parikh et al., 2022). Here, a single algorithm addresses a problem from different perspectives.

These models represent a before and after and are bound to revolutionize how we work. This is the beginning of the fifth industrial revolution thanks to our ability to create systems through the convergence of digital, physical, and biological technologies using these new models of knowledge creation (Corchado, 2023). If we lived in a fast-moving world, we must now prepare for a world of continuous acceleration. Those who keep pace with these advances will see their business, value generation and service opportunities increase exponentially in the coming years.

Deep learning is a subcategory of machine learning that focuses on algorithms inspired by the structure and function of the brain, called artificial neural networks (Chan, et al., 2016; Kothadiya et al., 2022, Alizadehsani et al., 2023). These networks, especially when they have many (deep) layers, have proven to be extremely effective in a variety of AI tasks. Deep learning-based generative models can automatically learn to represent data and generate new ones that resemble the distribution of the original data.

CNNs are a specialized class of neural networks designed to process data with a grid-like structure, such as an image. They are central to computer vision tasks. In the context of generative AI, CNNs have been adapted to generate images. For example, generative antagonistic networks (GANs) often use CNNs in their generators and discriminators to produce realistic images.

GANs, introduced by Ian Goodfellow and his collaborators in 2014, consist of two neural networks, a generator, and a discriminator, which are trained together (Goodfellow et al., 2014). The generator attempts to produce data (such as images), while the discriminator attempts to distinguish between real data and generated data. As training progresses, the generator gets better and better at creating data that deceives the discriminator. CNNs are often used in the GAN architecture for image-related tasks. On the other hand, variational autoencoders (VAEs), for example, are another type of generative model based on neural networks (Wei & Mahmood, 2020). Unlike GANs, VAEs explicitly model a probability distribution for the data and use variational inference techniques to train. In addition, pixel-based models (Su et al., 2021) are generative AI frameworks based on deep learning and generate images on a pixel-by-pixel basis, using recurrent neural networks or CNNs.

Deep learning, in particular, convolutional networks, have been fundamental tools in the development and success of many generative AI models, especially those focused on image generation. These techniques have enabled significant advances in the ability of models to generate content that is indistinguishable from real content in many cases.

For instance, ChatGPT has come into our lives and changed them, and we had hardly noticed. Some people have only heard of it, others have used it on occasions, and many of us are already working on projects and generating value with this technology. The ability of this tool to write text, to generate algorithms, to synthesize and generate reasoned proposals is extraordinary, but this is only the tip of the iceberg. It is already being used to create systems for customer service, medical data analysis, decision support and diagnostics, among others.

But ChatGPT is only the first such system to make its way into the market. There are many other models and tools: BARD, XLNet, T5, RoBERTa, Bedrock, Wu Dao, Nemo, LLAMA 2, etc. Technology such as this will allow for the development of much more accurate diagnostic systems based on evidence and clinical records, more widespread use of telemedicine, systems for monitoring chronic patients in their homes, etc. In this regard, algorithms of great interest for the medical field are being developed at different levels, such as transformers, autoencoders, deep energy-based generative models, variational inference models of prototypes, reinforcement learning systems with causal inference, to name but a few. AI has the potential to fundamentally change the way we live and work, but it also poses significant ethical challenges in terms of privacy and security that need to be addressed.

2.3. The Shift from Traditional AI to Generative AI

The history of artificial intelligence (AI) is rich and fascinating and, like everything else, it can have different interpretations and key elements. Here is a summary of some transcendental elements that allow us to analyze the evolution of this field quickly from the appearance of the first artificial neuron to the construction of the first transformer and the popularization of ChatGPT:

1. Artificial neuron (1943): Warren McCulloch and Walter Pitts published “A Logical Calculus of the Ideas Immanent in Nervous Activity”, where a simplified model of a biological neuron, known as the McCulloch-Pitts neuron, was presented. This model is considered the first artificial neuron and is the basis of artificial neural networks (McCulloch & Pitts, 1943).

2. Perceptron (1957-1958): Frank Rosenblatt introduced the perceptron, the simplest supervised learning algorithm for single-layer neural networks. Although limited in its capabilities (e.g., it could not solve the XOR problem), it laid the foundation for the future development of neural networks (Rosenblatt, 1958).

3. AI Winter (1970s-1980s): Limitations of early models and lack of computational capacity led to a decline in enthusiasm and funding for AI research. During this period, neural networks were not the focus of the AI community (Moor, 2006).

4. Backpropagation (1986): Rumelhart, Hinton and Williams introduced the backpropagation algorithm for training multilayer neural networks (Rumelhart et al., 1986). This algorithm began to revive interest in neural networks. Recurrent networks, which use backpropagation, pay attention to each word individually and sequentially. These networks operate sequentially. In these networks, the order in which each word appears is considered in the training. In the context of the recurrent networks that appeared in the late 1980s and early 1990s, RNNs were developed and created to process sequences of data. To train these networks, the backpropagation through time technique (BPTT) is used. RNNs can maintain a “state” over time, which makes them suitable for tasks such as time series prediction and natural language processing. However, traditional RNNs faced problems such as gradient vanishing and gradient explosion. Recurrent networks lose context as they progress through paragraph evaluation/generation, which is a problem if the text is long. This problem was solved by other networks with backpropagation, long short-term memory (LSTM), introduced by Hochreiter and Schmidhuber (1997), a specialized variant of RNNs designed to deal with the vanishing gradient problem. LSTMs can learn long-term dependencies and have been central to many advances in natural language processing and other sequential tasks until the advent of transformers. These networks include, at each stage of learning, mathematical operations that prevent it from forgetting what was learned at the beginning of the paragraph. However, these networks have other problems related to the impossibility of parallelizing their training, making the creation of large models practically unfeasible. In this type of network, all training is sequential.

5. Deep Learning and Convolutional Neural Networks (Convolutional Neural Networks, CNN, 2012): In 2012, Alex Krizhevsky, Ilya Sutskever and Geoffrey Hinton presented a convolutional neural network that won the ImageNet image classification challenge by a wide margin (Krizhevsky et al., 2012). This event marked the beginning of the “Deep Learning” era with renewed interest in neural networks that started to become popular in 2006, the year in which the end of the “AI Winter” began. These networks are particularly suitable for classification and image processing, are structured in layers and are organized into three main components: convolutional layers, activation layers and clustering layers. Convolutional layers are responsible for extracting important features from images by means of filters or kernels. The filters glide over the image, performing mathematical operations to detect specific edges, shapes, or patterns. In activation layers, activation functions (such as ReLU) are applied to add non-linearity and increase the network’s ability to learn complex relationships. Finally, the clustering layers reduce the size of the image representation, reducing the number of parameters and making the network more efficient in processing. As information passes through these layers, the CNN learns to recognize more abstract and complex features, allowing for the identification of objects, people, or anything else that needs to be identified. The work done in this field for the construction of massive information processing systems and for the development of parallel projects has given rise to the transformers that are used today (Gerón, 2022).

6. Transformers (2017): Vaswani et al. introduced the transformer architecture in the paper “Attention Is All You Need”. This architecture, based on attention mechanisms, proved to be highly effective for natural language processing tasks and became the basis for many subsequent models, including GPT. The advantage of these networks over backpropagation models such as LSTMs and deep learning lies in their ability to parallelize learning. Unlike recurrent neural networks (RNNs) or convolutional neural networks (CNNs), transformers do not rely on a fixed sequential or spatial structure of the data, which allows them to process information in parallel and capture long-term dependencies in the data. In this regard, the concept of word embedding, which is the basis of transformer learning, is worth mentioning. This is a technique within natural language processing for text vectorization. Transformers make it possible to analyze all the words in a text in parallel and, in this way, the processing and creation of the network is faster. That said, it should be noted that these networks require huge amounts of data and very powerful hardware, as mentioned above. For example, GPT-3 was created with 175 billion parameters and 45 TB of data, and GPT-4 with 1000,000,000,000,000 million parameters and a larger but unknown number of TB.

7. GPT and ChatGPT (2018-2020): OpenAI launched the generative pre-trained transformer (GPT) model series. GPT-2, released in 2019, demonstrated an impressive ability to generate coherent and realistic text. GPT-3, released in 2020, further extended these capabilities and led to the popularization of chat-based applications such as ChatGPT (Abdullah et al., 2022). This product has had impressive penetration power, having reached 100 million users in 2 months, when other platforms such as Instagram have taken 26 months to reach the same number of users (Facebook 54 months or Twitter 65 months).

These seven elements may be regarded as a chronological list of findings and facts reflecting the evolution of AI from its origins to the emergence of what is known today as generative AI.

3. Large Language Models

In this section, we introduce large language models (LLMs). After a generic definition, selected success stories are discussed (Itoh & Okada, 2023). Rather than conducting an exhaustive study, the intention is to highlight the LLMs that are currently most relevant and comment on their distinctive aspects.

3.1. Defining Large Language Models

Large Language Models are artificial intelligence models designed to process and generate natural language. These models are trained on vast amounts of text, enabling them to perform complex language-related tasks such as translation, text generation and question answering, among others.

LLMs have become popular largely due to advances in transformer architecture and the increase in available computational capacity. These models are characterized by many parameters, allowing them to capture and model the complexity of human language.

Large Language Models have revolutionized the field of natural language processing and have several distinctive features. These are the most characteristic elements of LLMs:

• Large number of parameters: LLMs, as the name implies, are large. For example, GPT-3, one of the best known LLMs, has 175 billion parameters. This huge number of parameters allows them to capture and model the complexity of human language.

• Large corpus training: LLMs are trained on vast datasets that span large portions of the internet, such as books, articles, and websites. This allows them to acquire a broad general knowledge of language and diverse topics.

• Text generation capability: LLMs can generate text that is coherent, fluent and, in many cases, indistinguishable from human-written text. They can write essays, answer questions, create poetry and more.

• Transfer learning: Once trained on a large corpus, LLMs can be “tuned” for specific tasks with a relatively small amount of task-specific data. This is known as “transfer learning” and is one of the reasons LLMs are so versatile.

• Use of transformer architecture: Most modern LLMs, such as GPT and BERT, are based on a transformer architecture, which uses attention mechanisms to capture relationships in data.

• Multimodal capability: While LLMs have traditionally focused on text, more recent models are exploring multimodal capabilities, meaning they can understand and generate multiple types of data such as text and images simultaneously.

• Generalization across tasks: Without the need for specific architectural changes, an LLM can perform a wide variety of tasks, from translation to text generation to question answering. Often, all that is needed is to provide the model with the right prompt or stimulus.

• Ethical challenges and bias: Because LLMs are trained on internet data, they can acquire and perpetuate biases present in that data. This has led to concerns and discussions about the ethical use of these models and the need to address and mitigate these biases.

Similarly, the growth of different LLM models is exponential in time, with each LLM developer working on a wide variety of applications to meet different needs and resource levels. This includes both larger models with many parameters and smaller models with fewer parameters. Companies such as OpenAI and Google have been developing models with an ever-increasing number of parameters, where these models are able to tackle very diverse and complex tasks and often perform outstandingly well in a wide range of applications. However, the case of the META company with its Llama 2 model has created commotion due to the different parameterized versions of the model and is being optimized to be able to run in low hardware performance environments. The following Table 1 shows data regarding some of these models:

Table 1. LLM models.

Model Name |

Company |

Number of Parameters |

Training Information Quantity |

Website |

GPT-3 |

OpenAI |

175 billion |

Approx. 570GB (WebText, books, others) |

|

BERT-Large |

340 million |

Wikipedia + BookCorpus |

||

T5 (Text-to-Text Transfer Transformer) |

Google AI |

Varies depending on version (from 60 million to 11 billion) |

C4 (Common Crawl) |

https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html |

RoBERTa |

Facebook AI |

Varies depending on version (up to 355 million for RoBERTa-Large) |

Numerous datasets including WebText, OpenWebText, and others |

https://ai.meta.com/blog/roberta-an-optimized-method-for-pretraining-self-supervised-nlp-systems/ |

XLNet |

Google/CMU |

Up to 340 million |

Various datasets including Wikipedia and BookCorpus |

|

CLIP |

OpenAI |

281 million |

Internet images + associated text |

|

DALL·E |

OpenAI |

Approx. 12 billion (based on GPT-3) |

Images and text descriptions |

|

Llama 2 |

Meta AI |

1000 million |

1000 million words |

|

Wu Dao |

Beijing Academy of Artificial Intelligence (BAAI) |

1.75 trillion |

4.9 terabytes of text and code |

|

LaMDA |

Google AI |

137 billion |

Google databases |

|

PaLM |

Google AI |

540 billion |

Google databases |

3.2. Types of Large Language Models

What follows are some of the types of LLMs, and an identification of their key characteristics and potential:

1. Autoregressive models:

• GPT (Generative Pre-Trained Transformer): Developed by OpenAI, GPT is an autoregressive model that generates text on a word-by-word basis. It has had several versions, with GPT-3 being the most recent and advanced at the time of the last update in 2021.

2. Bidirectional model classification:

• BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, BERT is a model that is trained bidirectionally, meaning that it considers context on both the left and right sides of a word in a sentence. It is especially useful for reading comprehension and text classification tasks.

3. Sequence-to-sequence models:

• T5 (Text-to-Text Transfer Transformer): Developed by Google, T5 interprets all language processing tasks as a text-to-text conversion problem. For example, “translation”, “summarization” and “question answering” are handled as transformations from text input to text output.

• BART (Bidirectional and Auto-Regressive Transformers): Developed by Facebook AI, BART combines features of BERT and GPT for generation and comprehension tasks.

4. Multimodal models:

• CLIP (Contrastive Language–Image Pre-training) and DALL·E: Both developed by OpenAI, these models combine computer vision and natural language processing. While CLIP is able to understand images in the context of natural language, DALL-E generates images from textual descriptions.

• WU DAO is a deep learning language model created by the Beijing Academy of Artificial Intelligence that has multimodality features. It has been trained on both text and image data, so it can tackle both tasks. It was trained with many parameters (1.75 trillion).

4. Algorithmics Relevant in Field of Generative AI

Generative artificial intelligence is mainly based on unsupervised learning techniques. This differs from supervised learning models that need labelled data to orchestrate their training phase. The absence of such labelling constraints in unsupervised learning models, such as generative adversarial networks (GANs) or variational autoencoders (VAEs), allows for the use of larger and more heterogeneous datasets, resulting in simulations that closely mimic real-world scenarios (Goodfellow et al., 2016). The main goal of these generative models is to decipher the intrinsic probability distribution P(x) to which the dataset adheres. Once the model is competently trained, it possesses the ability to generate new samples of data ‘x’ that are statistically consistent with the original dataset. These synthesized samples are drawn from the learned distribution, thus extending the applicability of generative models in various sectors such as healthcare, finance, and creative industries (Baidoo-Anu & Owusu Ansah, 2023).

The landscape of generative AI is notably dominated by two key architectures: generative adversarial networks (GANs) and generative pre-trained transformers (GPTs). GANs operate through dual neural networks, consisting of a generator and a discriminator. The generator produces synthetic data, while the discriminator evaluates the authenticity of this data. This adversarial mechanism continues iteratively until the discriminator can no longer distinguish between real and synthetic assets, thus validating the generated content (Hu, 2022; Jovanović, 2022). GANs are mainly used for applications in graphics, speech generation and video synthesis (Hu, 2022).

There are multifaceted contributions from various architectures such as GANs, GPT models and especially variational autoencoders (VAE). The latter not only offer a probabilistic view of generative modelling, but also allow for a more flexible understanding of the underlying complex data distributions (Kingma and Welling, 2013). In addition, the advent of multimodal systems, which harmonize diverse data types in a singular architecture, has redefined the capacity for intricate pattern recognition and data synthesis. This evolution reflects the increasing complexity and nuances that generative AI can capture.

The interaction between VAEs and multimodal systems exemplifies the next frontier of generative AI. It promises not only greater accuracy, but also the ability to generate results that are rich in context and aware of variations between different types of data. In this context, generative AI has evolved from a mere data-generating tool to a progressively interdisciplinary platform capable of understanding nuances and solving complex problems in various industries (Zoran, 2021).

4.1. Stochastic Latent Actor-Critic: Deep Reinforcement Learning with a Latent Variable Model

Deep reinforcement learning (RL) algorithms leverage high-capacity networks to directly learn from image observations. However, these high-dimensional observation spaces present challenges as the policy must now solve two problems: representation learning and task learning.

This paper proposes the Stochastic Latent Actor-Critic (SLAC) algorithm, a high-performance, high-efficiency RL method for learning policies for complex continuous control tasks directly from high-dimensional image inputs. SLAC specifically addresses the challenges posed by large observation spaces through the introduction of a stochastic latent state that summarizes the relevant information from each situation for decision making.

In this way, the SLAC actor-critic divides the general problem of learning the state representation and determining the most appropriate action into two specialized processes. On the one hand, the encoder module is responsible for compressing the observation information into a latent representation useful for the task. On the other hand, the traditional actor-critic uses this latent state to evaluate situations and select the best action, without needing to directly process the complex original image input.

SLAC proposes an innovative and robust technique to integrate stochastic sequential models with RL into a single unified framework. This is accomplished by creating a succinct latent representation, which is subsequently used to conduct RL within the latent space generated by the model. Experimental trials indicate that this approach exceeds the performance of both model-free and model-based competitors in terms of final results and the efficient use of samples, especially across a spectrum of complex image-based control tasks.

In the context of generative environment models for RL, these findings emphasize the importance of latent representation learning that can accelerate reinforcement learning from images, representing a significant advance in the domain of machine learning and generative artificial intelligence.

In traditional deep reinforcement learning (DRL) paradigms, such as q-learning or policy gradients, the objective function is often adapted to maximize the expected performance J(θ) defined as:

Where τ represents a trajectory and γ is the discount factor.

In the context of DRL with a latent variable model, the incorporation of a latent space z adds an additional layer of abstraction. Specifically, state s is replaced or augmented by a latent variable z, which in turn can be a function of the state z = f(s) or can be learned from the unsupervised data.

Where ϕ are the parameters of the latent variable model and the policy π is now conditioned not only on S but also on z.

The success of this approach lies in the quality of the learned latent space and how well it captures the nuances needed for optimal decision making. It is a promising line of research, and the advances here could potentially revolutionize the way we approach complex, high-dimensional, partially observable decision-making problems in DRL.

4.2. Video: High-Definition Video Generation with Diffusion Models

The sequential composition of spatial and temporal super-resolution video models exhibits an ingenious architecture, as it not only elevates pixel-level fidelity, but also ensures temporal coherence between the generated frames (Simonyan & Zisserman, 2014; Xie et al., 2018). This facet is particularly beneficial for applications that require dynamic scene rendering and fluid motion rendering; conventional image-based generative models do not meet those conditions (Jiang et al., 2018). Furthermore, the system’s ability to scale towards text-to-high-definition video output, made possible by its fully convolutional architecture, represents a significant advance over static image generation. In traditional generative models, the computational complexity for video generation often scales poorly, making high-definition results computationally infeasible (Vondrick et al., 2016). In contrast, the modular image-video cascade structure facilitates more efficient resource allocation, enabling high-quality video sampling in substantially shorter periods of time (Tulyakov et al., 2018).

The integration of diffusion models into the existing image-video architecture generates a compelling fusion of technologies that can serve to augment its generative capabilities. By introducing the temporal component with a range of 0 to 1, the diffusion model imparts a temporally consistent level of stochasticity and granularity to the generated videos, enriching the high-level representations of VAE (Kingma & Welling, 2013; Ho et al., 2020).

This hybrid model can be conceptually visualized as operating in two stages: first, the VAE Encoder(x) encoder function computes a latent variable z from the input x. This z subsequently serves as the initial condition for the diffusion model, essentially fulfilling the role of $x$ in its equations. Next, a time-dependent denoising function Dt(zt), is introduced, which refines the high-level representations of the VAE over time. It culminates in a synthesis function xθ (z,t)=Decoder(z) + Dt(zt), thus orchestrating a richer, temporally smoothed output.

The objective function L(x) for the diffusion model then becomes:

Where .

In this way, the diffusion model learns to adjust the high-level representations generated by the VAE over time, thus providing a richer, temporally smoothed generative model that takes advantage of the strengths of both architectures.

4.3. Motion Diffuse: Text-Driven Human Motion Generation with Diffusion Model

In the MotionDiffuse architecture for text-based human motion generation, special attention should be paid to the design of loss functions that align with the attention structure embedded in the model. Given that Motion Diffuse employs attention mechanisms to modulate the relationship between text and motion spaces, two prominent loss functions could be of critical importance.

Incorporating specialized loss functions such as those in the GLIDE model could further refine the MotionDiffuse framework, especially with its unique approach to text-based human motion generation. In MotionDiffuse, the incorporation of a stochastic noise term, guided by the equation , offers an interesting avenue for loss function innovation.

In the original MotionDiffuse methodology, the model focuses on predicting a noise term instead of xt-1 in line with the GLIDE framework (Nichol et al., 2021). This is captured by a mean squared error (MSE) loss function:

Consider the integration of the following specialized loss functions:

Loss of Attentional Alignment Between Modalities

This loss function ensures that the attention distributions between text and motion spaces are aligned. Since the attention scores at and am at meter are derived from the text and motion encoders respectively, the loss can be defined as the Kullback-Leibler divergence between the two:

This loss encourages the model to pay attention to semantically similar regions in both the text and motion domains, thus encouraging better alignment between modalities.

Text-Guided Motion Fidelity Loss

To ensure that the generated motion is not just any motion but one that specifically aligns with the text description, a text-guided motion fidelity loss can be introduced. This would measure the consistency between the high-level features extracted from the generated motion and those dictated by the text. Let Ft and Fm be the high-level text and motion features:

Coupling these loss functions with traditional generative losses such as mean square error (MSE) or generative adverse loss ensures that Motion Diffuse generates motions that are not only qualitatively good, but also well aligned with textual descriptions. This would address some of the challenges in generating motions that are diverse and textually consistent.

By intricately linking these loss functions with the attentional mechanisms within the model, MotionDiffuse can facilitate more nuanced, temporally coherent, and contextually relevant motion sequences guided by textual descriptions.

By merging these specialized loss functions into a unified loss term, we are able to write:

Here, λ1, λ2, λ3 are the hyperparameters that control the balance between the different loss components.

4.4. The Emergence of Diffusion Models and Latent Space Dynamics in Text, Audio, and Video Generation

This is a recent innovation in the field of probabilistic denoising diffusion denoising models (DDPMs). Unlike conventional DDPMs, LDMs operate in latent space and are conditional on textual representations (Rombach et al., 2022). This allows for a step-by-step granular generation process involving several conditional diffusion steps.

The loss function is meticulously defined as the mean square error in the noise space (ξ∽N(0, I)\). Mathematically, this can be formulated as:

Where α is a small positive constant and ξθ represents the denoising network. This loss function allows for efficient training by optimizing a random term t with stochastic gradient descent. It is worth noting that this approach allows for the diffusion model to be efficiently trained by optimizing the evidence lower bound (ELBO) without requiring adversarial feedback, resulting in remarkably faithful reconstructions that match the ground truth distribution (Huang et al., 2023).

In 2023, the generative artificial intelligence landscape is undergoing a seismic shift, marked predominantly by the maturation of diffusion models. In the text domain, while transformers were once lauded for their versatility in natural language understanding and generation, diffusion models are now catalyzing a new wave of innovation, offering nuanced language models with more robust generalization capabilities. They are extending the fundamental work of earlier models, adding layers of complexity and applicability in sentiment analysis, abstract summarization, and more (Nichol et al., 2021).

In the audio sector, models such as Make-An-Audio are revolutionizing text-to-audio (T2A) generation using enhanced broadcast techniques (Zhao et al., 2023). They address the inherent complexity of long, continuous signal data, something that previous methods such as WaveGAN and MelGAN struggled with. This results in higher fidelity audio generation and nuanced semantic understanding, a radical shift from previous methods.

Video generation has also been strengthened by high-definition broadcast models, moving beyond the resolution limitations, which often plagued older GAN-based methods. Here, the focus is not only on pixel-level detail, but also on semantic coherence between video frames, thus achieving a new standard in the realism of the generated content (Rombach et al., 2022).

In cross-modal generative learning, the advent of stochastic latent actor-critic models integrated with diffusion models offers the possibility of seamless and more accurate translation between different data types. These hybrid models are beginning to unlock capabilities for high-definition, high-fidelity generation in text, audio, and video modalities (Haarnoja et al., 2018).

Taken together, these advances in text, audio, and video broadcast models are setting the stage for a future of multimodal generative AI that is richer, more dynamic, and more far-reaching in its applications, thus revolutionizing the broader landscape of data analytics, content creation, and automated decision making.

5. Chat GPT, Its Potential and How to Take Advantage of It

5.1. Description of GPT

Generative pre-trained transformer (GPT) models represent a significant innovation in the field of natural language processing (NLP) and artificial intelligence (AI). Developed by OpenAI, GPT models are based on the transformer architecture, which was introduced by Vaswani et al. (2017).

GPT models are pre-trained on large corpora of text and then tuned on specific tasks, allowing them to generate text that is consistent, grammatically correct, and often indistinguishable from human-generated text (Radford et al., 2019).

GPT models use multiple layers of attention and a combination of multi-head attention, allowing them to capture a variety of features and relationships in data. The ability of GPT models to understand and generate text has led to advances in a variety of applications, including machine translation, creative text generation, and question answering (Brown et al., 2020).

5.1.1. Architecture and Attention

The transformer architecture, introduced by Vaswani et al. (2017), has revolutionized the field of natural language processing and represents a paradigm shift in the way sequences are modeled.

Unlike traditional recurrent architectures (Bahdanau, Cho, & Bengio, 2014), transformers eliminate recurrence and instead use attention mechanisms to capture dependencies in the data.

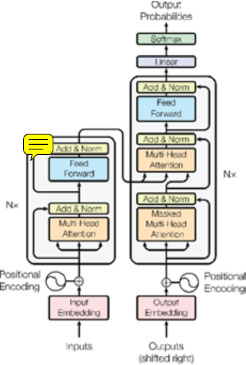

The architecture consists of two main parts: the encoder and the decoder; both are composed of a stack of identical layers containing two main sublayers: a multi-head attention sublayer and a feed-forward network.

The multi-head attention allows the model to simultaneously attend to different parts of the input, thus capturing complex and long-term relationships. The forward feed-forward network consists of a simple linear transform followed by a nonlinear activation function.

Transformer

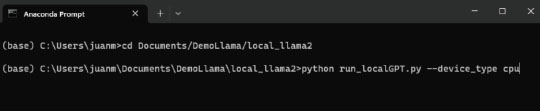

A key feature of the transformer architecture is the addition of residual connections around each of the sublayers (He, Zhang, Ren, & Sun, 2016), followed by layer normalization (Ba, Kiros, & Hinton, 2016). This facilitates training and allows gradients to flow more easily through the network. The combination of attention, residual connections, and layer normalization allows transformers to be highly parallelizable and computationally efficient, which has led to their widespread adoption in a variety of NLP tasks (Devlin, Chang, Lee, & Toutanova, 2018), see Figure 1.

Figure 1. Transformer - model architecture (Vaswani et al. 2017).

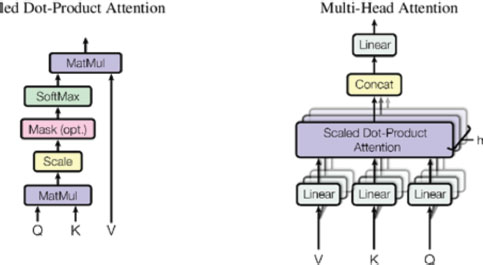

Attention is a central concept in the transformer architecture. It allows the model to weigh different parts of the input when generating each word of the output, which facilitates deep contextual understanding. Attention can be described in three main components:

Queries, keys, and values: Attention is computed using queries, keys, and values, which are vector representations of the words in the input. Queries and keys determine the attention weighting, while values are weighted according to these weights to produce the output (Vaswani et al. 2017).

Multi-head attention: Multi-head attention allows the model to pay attention to different parts of the input simultaneously. Each “head” of attention can focus on different relations in the text, and the outputs from all the heads are combined to form the final representation.

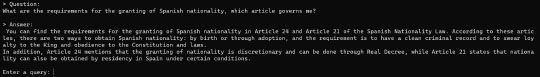

Autoregressive Attention: In the case of GPT, autoregressive attention is used, where each word can only pay attention to the previous words in the sequence. This ensures that text generation is performed in a causal and coherent manner (Radford et al., 2018; Brown et al., 2020), see Figure 2.

Figure 2. (left) Attention to scale product point. (right) multi-head attention consists of several layers of attention running in parallel (Vaswani et al. 2017).

Layers and Feed-Forward Network

The transformer architecture consists of a series of layers, each with a multi-head attention layer followed by a densely connected feed-forward network. Residual connections and layer normalization are applied at each stage to facilitate training and improve stability (He et al., 2016; Ba et al., 2016).

5.1.2. Pre-Training and Fine-Tuning

Pre-training and fine-tuning of the OpenAI GPT model are two crucial phases in the process of developing and adapting transformer-based language models. In particular, GPT is a leading paradigm in natural language processing research and represents a significant advance in the generation of coherent and contextually relevant text.

GPT Pre-Training

Pre-training is the initial phase in the creation of a GPT model and is founded on the key idea of knowledge transfer. During this stage, the model is trained on large amounts of untagged textual data. This is where GPT learns linguistic, semantic, and contextual patterns at a deep level. This process is carried out using an autoregression task, where the model predicts the next word in a sentence based on previous words (Vaswani et al., 2017). The attentional mechanism present in the transformer-like architecture allows the model to capture long-term relationships in the text, which enables it to generate coherent and contextually relevant responses.

GPT pre-training involves optimizing millions of parameters in the model using optimization algorithms such as stochastic gradient descent. Key hyperparameters, such as learning rate and transformer architecture, play a critical role in this process (Vaswani et al., 2017). The importance of tuning these hyperparameters appropriately to achieve successful pretraining is worth noting.

Fine-Tuning of GPT

After the pre-training phase, the GPT model is enriched with deep language knowledge. However, to adapt the model to specific tasks, such as generating text in a particular domain or answering specific questions, fine-tuning is necessary. During this phase, the model is trained on a set of labeled data relevant to the specific task to be addressed.

Tuning involves adjusting the weights of the pre-trained model using the task-specific dataset. Tuning involves a smaller learning rate compared to pre-training and usually requires fewer iterations because the knowledge previously acquired by the model is more specialized.

When approaching tuning, it is critical to choose the right dataset and design an effective evaluation strategy. The evaluation metrics must be aligned with the objectives of the task. For example, in sentiment analysis, metrics such as precision, recall and score could be considered. Ensuring adequate validation and test sets are also essential to avoid overfitting (Radford et al., 2019).

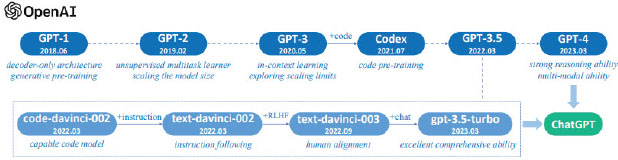

5.1.3. The Evolution of GPT

The following is a review of the evolutions of the GPT models developed by OpenAI, leading up to GPT-4. The GPT series of models represents one of the most significant advances in the field of artificial intelligence, specifically in natural language processing (NLP), see Figure 3.

Figure 3. A brief illustration of the technical evolution of the GPT series models (OpenAI, 2023).

GPT-1

Release: June 2018 by OpenAI.

Architecture: Transformer by Vaswani et al. (2017).

Parameters: 117 million.

Technical details: Uses a transformer architecture with 12 layers, 12 attention heads, and 768 hidden units.

Major contribution: Introduction of a transformer architecture with prior training for natural language processing tasks (Radford et al. 2018).

GPT-2

Release: February 2019 by OpenAI.

Parameters: 1.5 billion.

Technical details: GPT-2 featured an expansion in scale, being ten times larger than its predecessor. The model was trained on a diverse and larger corpus. 48 layers, 1600 hidden units, and 25.6 billion parameters in its largest version.

Major contribution: demonstrated that large-scale language models can generate consistent, high-quality text across paragraphs, which was useful for various applications and machine translation (Radford et al. 2019).

GPT-3

Release: June 2020 by OpenAI.

Architecture: GPT-2 architecture extension.

Parameters: 175 billion.

Technical Details: GPT-3 further extended the scale and featured several architecture and training improvements. Trained on a variety of tasks without the need for specific tuning.

Major Contribution: Highlighted the ability to perform “few-shot learning,” where the model can learn tasks with only a few examples. This model has been used in a wide range of applications, from content writing and editing to programming and user interface design, showing unprecedented versatility in the field (Brown et al. 2020).

Table 2 shows learning sizes, architectures, and hyperparameters.

Table 2. Sizes, architectures and learning hyperparameters (batch size in tokens and learning rate) of the models.

Model Name |

nparams |

nlayers |

dmodel |

nheads |

dhead |

Batch size |

Learning Rate |

GPT-3 Small |

125M |

12 |

768 |

12 |

64 |

0.5M |

6.0 × 10−4 |

GPT-3 Medium |

350M |

24 |

1024 |

16 |

64 |

0.5M |

3.0 × 10−4 |

GPT-3 Large |

760M |

24 |

1536 |

16 |

96 |

0.5M |

2.5 × 10−4 |

GPT-3 XL |

1.3B |

24 |

2048 |

24 |

128 |

1M |

2.0 × 10−4 |

GPT-3 2.7B |

2.7B |

32 |

2560 |

32 |

80 |

1M |

1.6 x I0-4 |

GPT-3 6.7B |

6.7B |

32 |

4096 |

32 |

128 |

2M |

1.2 × 10−4 |

GPT-3 13B |

13.0B |

40 |

5140 |

40 |

128 |

2M |

1.0 × 10−4 |

GPT-3 175B or “GPT-3” |

175.0B |

96 |

12288 |

96 |

128 |

3.2M |

0.6 × 10−4 |

CODEX

Release: A variant of GPT-3, designed specifically for scheduling tasks (Zaremba & Brockman, 2021).

Architecture: Based on the transformer architecture and is a variant of GPT-3 designed specifically for scheduling tasks.

Parameters: 175 billion parameters.

Technical details: Pre-trained on a large corpus of source code and documentation. It is primarily used to generate code and assist in programming tasks.

Major contribution: It has proven to be able to generate functional code in multiple programming languages. Its release has revolutionized the way developers interact with code, providing a powerful tool for automatic code generation and programming assistance (OpenAI, 2023).

5.2. ChatGPT, GPT-4

Language models based on the transformer architecture have undergone rapid development in recent years, culminating in the series of generative pre-trained transformer (GPT) models developed by OpenAI. In particular, GPT-4 and ChatGPT represent significant advances in the field of natural language understanding and generation, respectively. These models are the follow-up to the aforementioned models and have been, over the last few months, the beginning of a new era in text generative AI models. Both models are based on the transformer architecture, which has proven to be highly efficient for natural language processing tasks (Vaswani et al., 2017).

CHATGPT (GPT-3.5)

ChatGPT is a language model developed by OpenAI and is based on the GPT (Generative Pre-trained Transformer) architecture. It is a tuned variant of GPT-3 designed specifically for conversations and chat tasks. The model has been trained on a large corpus of Internet texts, but it is not known which specific documents were used in its training dataset. It has been designed to perform tasks ranging from creative text generation to programming and technical problem solving. This model is freely accessible through ChatGPT (openai.com).

Release: ChatGPT was released by OpenAI in 2021 according to Nakano et al. (2021).

Architecture: ChatGPT is also based on the transformer architecture and is a variant of GPT-3 optimized for conversations.

Parameters: ChatGPT has 175 billion parameters, similar to GPT-3.

Technical details: It is pre-trained on a corpus of conversations and text. Designed for chatbots and interactive conversational systems.

Key Contribution: ChatGPT has improved consistency and contextualization in conversations compared to previous models.

GPT-4

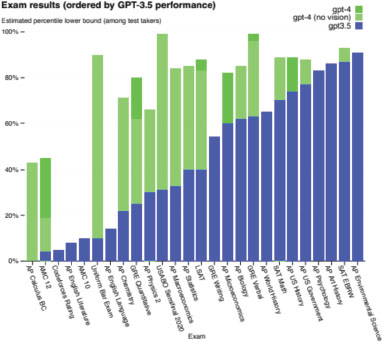

GPT-4 is the fourth iteration of the GPT series and represents a quantum leap in terms of capabilities, limitations, and associated risks. With a yet undisclosed number of parameters, GPT-4 has shown significant improvements in text generation, contextual understanding, and adaptability to various tasks. This model is available in the paid version of OpenAI called ChatGPT Plus, which includes certain restrictions of the GPT-4 model and new tools available such as plugins (OpenAI, 2023), code interpreter and custom instructions according to OpenAI (2023), Figure 4 shows the different work contexts.

Figure 4. Performance on scholastic and occupational assessments. For every test, we mimic real examination environments and grading systems. The assessments are arranged in ascending order according to the performance of GPT-3.5. GPT-4 surpasses GPT-3.5 in the majority of the evaluated exams (OpenAI, 2023).

The plugins allow developers to add specific functionality to the model, such as web search, language translation and querying scientific databases. The publication stresses that these plugins are designed to be secure and reliable and undergo a rigorous review process before approval.

Release: The technical report on GPT-4 was published by OpenAI in March 2023.

Architecture: GPT-4 is a model based on the transformer architecture that can accept image and text inputs and produce text outputs.

Parameters: The report does not specify the exact number of parameters but highlights that the model is large scale.

Technical details: GPT-4 is pre-trained to predict the next token in a document.

The post-training alignment process results in improved performance on measures of factuality and adherence to desired behavior. Infrastructure and optimization methods were developed that behave predictably over a wide range of scales.

Major contribution: GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on several professional and academic benchmarks (OpenAI, 2023).

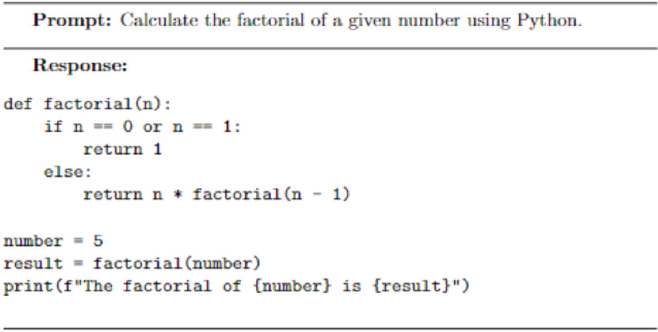

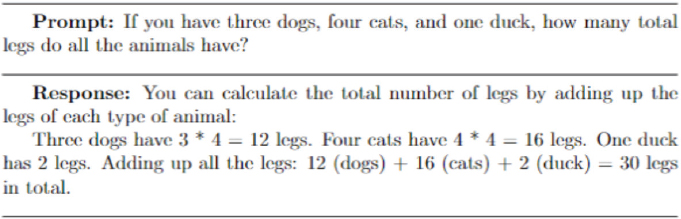

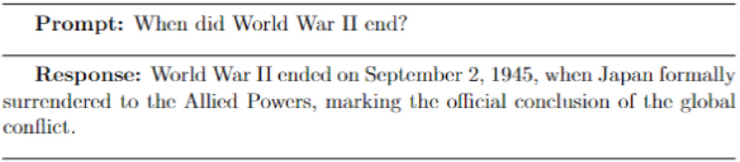

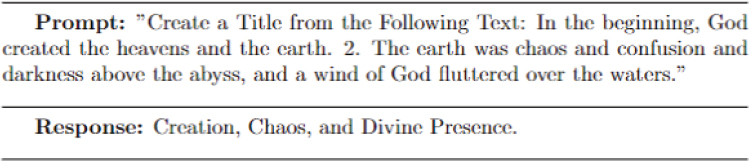

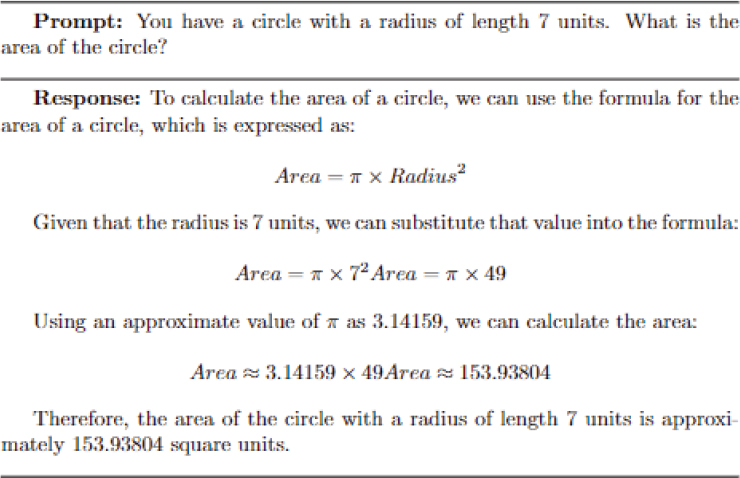

5.3. Prompts

Prompts are instructions, questions or statements designed to invoke a specific response from a natural language processing (NLP) model, such as OpenAI’s GPT models. In the context of chatbots and other PLN applications, prompts serve as initial inputs that guide the model in generating a coherent and relevant response. Their role is critical in controlling and directing the interaction with the model, influencing the quality, accuracy, and context of the generated response.

5.3.1. Strategies for Establishing Good Prompts

• Clarity and precision: A good prompt should be clear and precise, avoiding ambiguities that may lead to confusing or incorrect answers (Reiter et al., 2020).

• Contextualization: Including the necessary context helps the model understand the intent behind the query, improving the relevance of the response (Chen et al., 2019).

• Use of examples: In few-shot learning, providing examples within the prompt can help the model understand the intended task (Brown et al., 2020).

• Iterative experimentation: Iterative experimentation and adjustment of the prompt allows to fine-tune the interaction and obtain optimal responses (Wallace et al., 2019).

• Ethical considerations: Prompts should be formulated with awareness of potential biases and comply with privacy and ethics regulations (Hovy & Spruit, 2016).

Prompts play a central role in the design of and interaction with generative language models and other PLN applications. Their formulation and management involve a combination of technical, linguistic, and ethical considerations. The literature in PLN offers a wide spectrum of research and techniques related to the effective use of prompts, and their study and application continue to be a vital area in the interface between humans and artificial intelligence systems.

5.3.2. Types of Prompts and Their Uses

• Informative prompts: Designed to request specific information, they are useful in applications such as data search and virtual assistants (Manning et al., 2008).

• Interrogative prompts: Formulated as questions, they are employed to invoke detailed responses in areas such as customer support and tutoring (Jurafsky & Martin, 2019).

• Instructional prompts: Used to direct the model to perform a particular task, such as translation, summarization, or creative content generation (Reiter & Dale, 2020).

• Contextual prompts: Include additional context to guide the model’s response in complex scenarios, such as ongoing dialogues or specialized tasks (Serban et al., 2017).

• Comparative prompts: Designed to solicit comparisons, analyses, or evaluations between entities or concepts.

• Code generation prompts: Used in programming environments to automatically generate code snippets, such as in OpenAI Codex (OpenAI, 2021).

• Linguistic assistance prompts: Applied in tools for grammar correction, translation, and other linguistic services, such as Grammarly or Google Translate.

5.3.3. Example: Code Generation

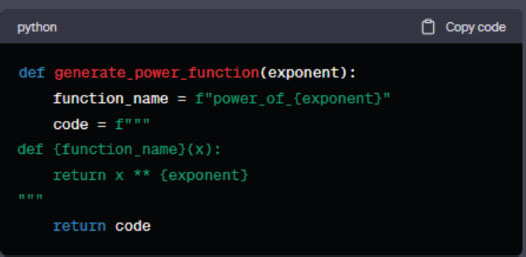

Automatic code generation is a technique used to produce source code programmatically. This practice is especially useful when a large amount of similar or repetitive code needs to be generated. Instead of manually writing each piece of code, developers can use code generators to automate the process. In this article, we will explore how code can be generated automatically with Python and present a simple example of code generation for creating mathematical functions, see Figures 5, 6, 7.

Why Generate Code Automatically?

Efficiency: Reduces the time required to write code.

Consistency: Ensures that the generated code follows a specific pattern.

Flexibility: Allows global changes in the code with minimal effort.

Example of code generation in Python

Automatically generate Python functions that calculate the square, cube, and fourth power of a given number.

First, let’s define a Python function that takes an exponent as a parameter and returns the source code of a function that raises a number to that exponent.

Figure 5. Python exponent function.

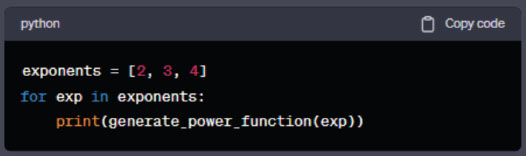

Generating Functions

Now, we will use this function to generate code for functions that calculate the square, cube and fourth power of a number.

Figure 6. Python list function.

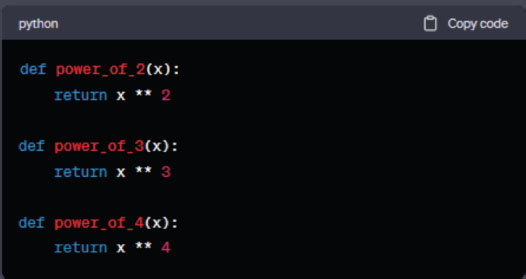

The generated code looks like this:

Figure 7. Python math function.

The generate_power_function function takes an exponent and returns a code snippet that defines a new Python function. This new function takes a parameter x and returns x raised to the provided exponent.

The for loop in the “generating functions” segment runs through a list of exponents. For each exponent, generate_power_function is called to generate the corresponding code.

Automatic code generation is a powerful technique that can save time and effort, especially when dealing with repetitive or similar code. The presented example is quite simple, but code generation is used in much more complex applications, including frameworks and software libraries.

5.4. Guidelines and Documentation

5.4.1. Ethics and Responsible Use

ChatGPT is generally accessed through an API provided by OpenAI, which requires authentication to ensure the security and integrity of interactions with the model, is highly versatile and can be implemented in a variety of applications, from customer service chatbots to virtual assistants and decision-making systems. The API allows for a wide range of customizations, including the ability to define specific prompts or instructions to guide the model’s behavior in different contexts. In addition, OpenAI provides detailed documentation covering technical aspects such as authentication, prompt structuring and interpretation of the responses generated by the model (OpenAI, 2021).

Access to ChatGPT is not limited only to interaction through the API, it is also accessible through a web interface provided by OpenAI. This web interface provides an intuitive and easy-to-use means of interacting with the model without requiring advanced technical knowledge. Users simply enter their prompts or questions in a text box, and the model generates answers that are displayed in the same interface. This method of access is especially useful for non-technical users or for those who wish to test the model’s capabilities without having to integrate it into a larger application or system. In addition, the web interface often includes additional features, such as the ability to adjust parameters such as temperature and maximum response length, which provides greater control over text generation (OpenAI, 2021).

It is crucial to keep ethical and responsible use guidelines in mind when interacting with ChatGPT, especially in applications that may have significant social or cultural implications. OpenAI provides specific guidelines to address these issues, including the prevention of plagiarism and the generation of inappropriate content.

5.4.2. Ethics and Responsible Use

The documentation also addresses ethical and responsible use issues. This includes guidelines on the prevention of plagiarism, the generation of inappropriate content, and consideration of the social and cultural implications of using large-scale language models. These models, trained on large datasets, have the potential to generate content that may be discriminatory, biased, or even dangerous (Hao, 2020; Bender et al., 2021). Therefore, it is imperative to address ethical issues from a multidisciplinary perspective that includes both technical and social aspects.

One of the most pressing challenges is the inherent bias in training data, which can perpetuate existing stereotypes and biases (Caliskan et al., 2017). Researchers are exploring methods to mitigate these biases, such as parameter adjustment and reweighting of training data (Zhao et al., 2018).

Transparency in the operation of models and traceability of their decisions are fundamental to the ethical use of AI. This is especially relevant in critical applications such as healthcare and the judicial system, where a misguided decision can have serious consequences (Doshi-Velez et al., 2017).

Responsible use involves implementing safeguards, such as content moderation systems and alerts for inappropriate content. It is also crucial to educate users about the limitations of these models and how to interpret their responses critically (McGregor et al., 2020).

Ethics in AI is a rapidly developing field that requires continued collaboration between engineers, ethicists, legislators, and other relevant stakeholders to ensure that the technology is used in a way that is beneficial to society as a whole.

5.4.3. Applications in Computer Programming and Education

OpenAI Codex has demonstrated significant performance on typical introductory programming problems. Its performance was compared to that of students taking the same exams, showing that Codex outperforms most students. In addition, we explored how Codex handles subtle variations in problem wording, noting that identical input often leads to very different solutions in terms of algorithmic approach and code length. This study also discusses the implications that such technology has on computer science education as it continues to evolve (Finnie-Ansley et al., 2022).

Using OpenAI Codex as a large language model, programming exercises (including sample solutions and test cases) and code explanations were created. The results suggest that most of the automatically generated content is novel and sensible, and in some cases is ready to be used as-is. This study also discusses the implications of OpenAI Codex and similar tools for introductory programming education and highlights future lines of research that have the potential to improve the quality of the educational experience for both teachers and students (Sarsa et al., 2022).

GitHub Copilot, powered by OpenAI Codex, has been evaluated on a dataset of 166 programming problems. It was found to successfully solve about half of these problems on its first attempt and solved 60% of the remaining problems using only natural language changes in the problem description. This study argues that this type of prompt engineering is a potentially useful learning activity that promotes computational thinking skills and is likely to change the nature of code writing skill development (Denny et al., 2022).

5.4.4. Applications in Academic Publishing

ChatGPT is seen as a potential model for the automated preparation of essays and other types of academic manuscripts. Potential ethical issues that could arise with the emergence of large language models such as GPT-3, the underlying technology behind ChatGPT, and their use by academics and researchers are discussed, placing them in the context of broader advances in artificial intelligence, machine learning, and natural language processing for academic research and publication (Lund et al., 2023).

The release of the model has led many to think about the exciting and problematic ways in which artificial intelligence (AI) could change our lives in the near future. Considering that ChatGPT was generated by fine-tuning the GPT-3 model with supervised and reinforcement learning, the quality of the generated content can only be improved with additional training and optimization. There are a number of opportunities, as well as risks associated with its use, as the inevitable implementation of this disruptive technology will have far-reaching consequences for medicine, science, and academic publishing (Homolak, 2023).

It also appears to be a useful tool in scientific writing, assisting researchers and scientists in organizing material, generating an initial draft and/or proofreading. Several ethical issues are raised regarding the use of these tools, such as the risk of plagiarism and inaccuracies, as well as a possible gap in accessibility between high-income and low-income countries (M. Salvagno et al., 2023).

5.4.5. Conversational Systems

Chatbots are being applied in various fields, including medicine and healthcare, for human-like knowledge transfer and communication. In particular, machine learning has been shown to be applicable in healthcare, with the ability to manage complex dialogues and provide conversational flexibility. This review focuses on cancer therapy, with detailed discussions and examples of diagnosis, treatment, monitoring, patient support, workflow efficiency, and health promotion. In addition, limitations and areas of concern are explored, highlighting ethical, moral, safety, technical, and regulatory issues (Xu, L. et al., 2021).

Machine learning (ML) is a study of computer algorithms for automation through experience. The application of ML in healthcare communication has proven to be beneficial to humans. This includes chatbots for health education in COVID-19, cancer therapy, and medical imaging. The review highlights how the application of ML/AI in healthcare communication is able to benefit humans, including complex dialogue management and conversational flexibility (Sarkar Siddique & James C. L. Chow, 2021).

This article provides an overview of the opportunities and challenges associated with using ChatGPT in data science. It discusses how ChatGPT can assist data scientists in automating various aspects of their workflow, including data cleaning and preprocessing, model training, and results interpretation. It also highlights how ChatGPT has the potential to provide new insights and improve decision-making processes (Hossein Hassani & E. Silva, 2023).

5.4.6. Risk

This technology has shaken the foundations of many industries based on content generation and writing, so it is advisable to discuss good practices for writing scientific articles with ChatGPT and other artificial intelligence language models (A. Castellanos-Gómez, 2023). There are different sources of opinion as to the use of this technology and how it should be done.

These language models show significant advances in reasoning, knowledge retention, and programming compared to their predecessors. However, these improvements also bring new security challenges, including risks such as the generation of harmful content, misinformation, and cybersecurity. Despite mitigation measures, similar limitations remain in the model as in its previous versions, such as the generation of biased and unreliable content. In addition, its increased consistency could make the generated content more credible and, therefore, potentially more dangerous (OpenAI, 2023).

The risks to be considered in the use of these models are listed. As always, the person who makes use of these tools will ultimately be responsible, since, to date, a thorough supervision of the generated content is more than necessary to corroborate it:

• Misinformation.

• Harmful content.

• Damage to the performance, assignment, and quality of service.

• Disinformation and influence operations.

• Proliferation of conventional and non-conventional weapons.

• Privacy.

• Cybersecurity.

• Interactions with other systems.

• Economic impacts.

• Excessive dependence.

According to Gao et al. (2023) the scientific abstracts generated by ChatGPT were compared with the original abstracts using an artificial intelligence output detector, a plagiarism detector, and blind peer review. The results showed that the ChatGPT-generated abstracts were clearly written, but only 8% followed journal-specific formatting requirements. Although the generated abstracts were original with no detected plagiarism, they were often identified by using an AI output detector and by skeptical human reviewers. The conclusion is that ChatGPT writes credible scientific abstracts, however, it also raises ethical issues and challenges regarding the accuracy, completeness, and originality of the generated content.

6. Llama 2

Large language models (LLM) have proven to be very promising at the enterprise level. This is the case of the Meta company, which within its visions on artificial intelligence has sought its decentralization, where organizations can customize their virtual assistants and open-source models can be trained with expert knowledge. That is why Meta, a leader in the innovation and technology market headed by Mark Zuckerberg, has partnered with Microsoft Azure to present its generative AI tool Llama 2 (Touvron, H et al., 2023), considered a rival to ChatGPT and Bard differentiating itself by being an open source and not closed product where such LLMs are largely adjusted to human preferences placing security and private information at stake.

6.1. What is Llama 2 and what Are Its Features?